Tech Tent: Fake videos stir distrust


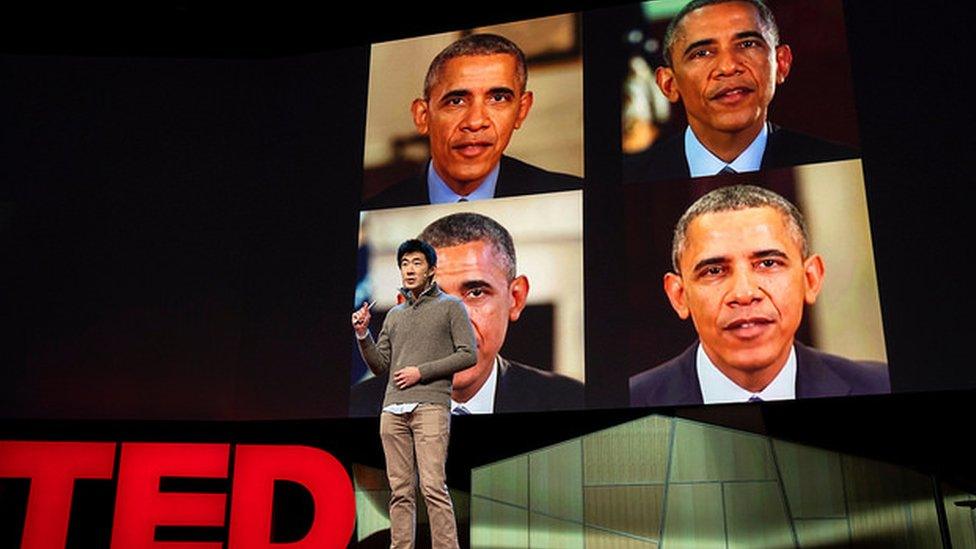

At the TED conference, Google engineer Supasorn Suwajanakorn demonstrated the AI-faked video

We have already had to wake up to fake news - will we soon have to stop trusting video and audio news too as artificial intelligence makes it easier to create convincing fakes?

On Tech Tent this week we look at the advances in technology which allow you to get anybody to say anything, and explore their implications.

It was a video featuring Barack Obama talking about a character from the hit movie Black Panther, and then making a crude remark about Donald Trump, that raised new concerns about this subject. It was not, of course, genuine - the actor Jordan Peele's voice had been synced to the lips of the former US president.

Stream or download the latest Tech Tent podcast

Listen live every Friday at 15.00 GMT on the BBC World Service

The video was created by BuzzFeed using software called FakeApp, a freely available tool which has also been used to superimpose the faces of celebrities onto porn videos. The exercise was designed to get people thinking about the threat posed by these techniques.

Prof Dame Wendy Hall, one of the authors of a UK government report on artificial intelligence, explains what is making this fakery possible: "It's what's happening generally with AI - the development of new techniques, deep learning algorithm techniques that can learn from data, in this case video data." She says this is "quite scary".

Lots of companies are working on this kind of technology, including the Canadian start-up Lyrebird, which says it can create a digital copy of anyone's voice from just a minute-long sample of their speech.

I tried this out using the demo on its website and it did not produce a particularly convincing version of my voice - though the firm insists that with a lot more data and the latest version of its technology it can achieve something much better.

When I called up one of Lyrebird's founders, Jose Sotelo, he explained the potential use of this technology to enable people with conditions like motor neurone disease to have a realistic digital voice after it became impossible for them to speak. He said he was aware that it might be possible to use it for fakery but was confident that the company had put in safeguards to stop that happening.

But the technology is rapidly improving - and a recent report on the malicious use of AI lists fakery of this kind as a major worry. "It's one among many signs that people should be cautious about what they believe and whether they believe what they read and see," said Miles Brundage, one of the report's authors. "It's a challenge to the seeing is believing idea, especially in the context of motivated actors who want to sow confusion and stir up controversy."

The Deepfake phenomenon started with putting famous faces on the bodies of porn stars

It was another video demonstration, again using Mr Obama as a subject, which kick-started this whole debate last year. University of Washington researchers demonstrated the use of machine learning techniques to match speech to lip movements.

One of the academics, Supasorn Suwajanakorn, downplays how likely it is that this technology will be used for malicious ends: "Does it make sense to go all the way to create fake videos to use in fake news when you can just make up text?" he asks. "A video contains 10,000 frames and you can detect one frame that looks wrong and then you can say for sure the video is fake."

You might also think that AI techniques could be used to spot fakes, but Dame Wendy is not too hopeful, saying: "It's much harder to create the technology to detect this than it is to develop the technology to make it happen. I don't think there are purely technical solutions to this."

She thinks the solution lies in all of us being more sceptical about the sources of online information. What is clear is that new propaganda weapons are emerging from the AI labs and we are all going to need to be more vigilant about what is real and what is fake.

