Reddit bans deepfake porn videos

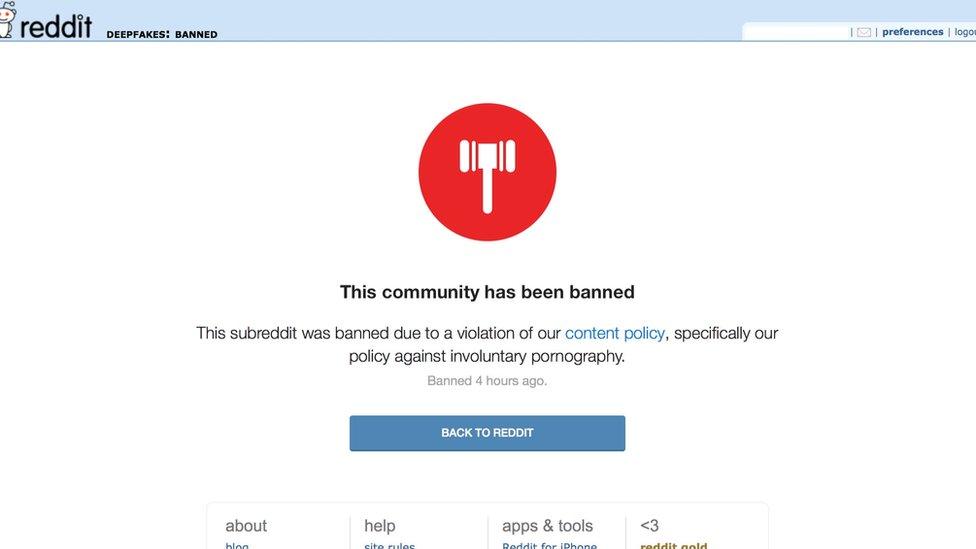

Reddit has blocked all content in affected forums, including posts that did not contain images

Reddit has banned "fake porn" - imagery and videos that superimpose a subject's face over an explicit photo or video without the person's permission.

The move follows the development of artificial intelligence software that made it relatively easy to create clips featuring computer-generated versions of celebrities' faces.

Reddit had become one of the most popular places to share and discuss so-called deepfake videos.

It also reworded its policy on content involving minors.

The discussion site had been under growing pressure to act after other platforms - including Twitter, Gfycat and Pornhub - introduced their own deepfake bans.

This image of Natalie Portman was computer-generated from hundreds of stills and featured in an explicit video

However, it may cause unease among some Reddit users who already feared the platform was becoming less "open" after its closure of two alt-right forums in 2017.

The move coincided with an announcement that Reddit's co-founder Alexis Ohanian was stepping aside from a hands-on role and would instead dedicate most of his time to a venture capital firm.

He did not comment on the new policies but told the Wall Street Journal that he intended to focus on opportunities related to emerging technologies.

"There are clearly things around security and privacy and identity that are going to be dramatically changed through technology, just because it's now possible," he said.

Mr Ohanian will remain a Reddit board member.

What are deepfakes?

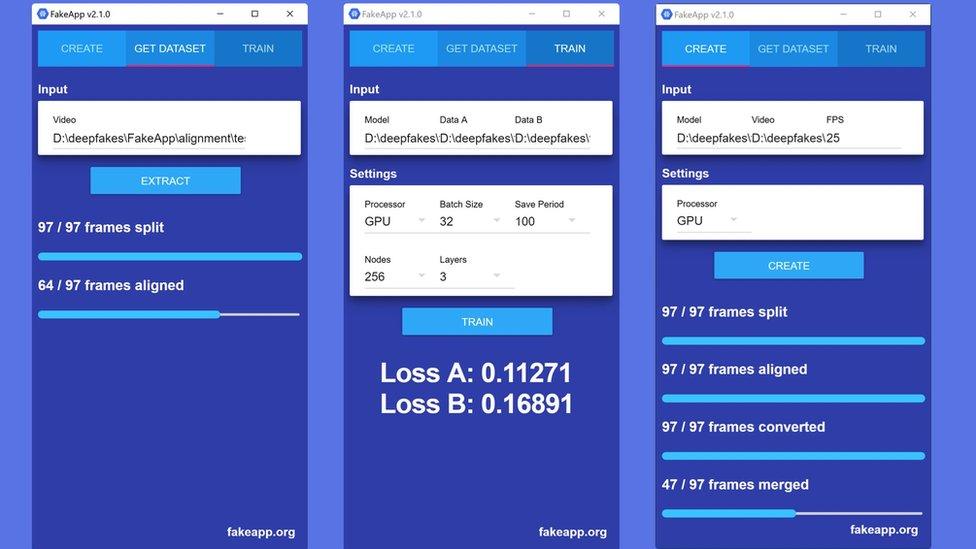

Deepfakes involve the use of artificial intelligence software to create a computer-generated version of a subject's face that closely matches the original expressions of another person in a video.

Several deepfake clips had been made to resemble the actress Gal Gadot

The algorithm involved is believed to have been developed last year.

To work it requires a selection of photos of the subject's face taken from different angles - about 500 different images are suggested for a good result - and a video clip to merge the results with.

The practice was brought to the wider public's attention in December, when the news site Motherboard reported how it had been used to create fake videos of the Wonder Woman actress Gal Gadot.

But it became much more common after January's release of a software tool called FakeApp, which made it possible to carry out the process with a single button press.

FakeApp made it easier for users to make use of the face-swapping algorithm

Not all of the clips generated have been pornographic in nature - many feature spoofs of US President Donald Trump, and one user has specialised in placing his wife's face in Hollywood film scenes.

But much of the material generated has merged female pop singers and actresses' faces with footage of women engaged in sexual acts.

Reddit's new involuntary pornography policy states that it "prohibits the dissemination of images or video depicting any person in a state of nudity or engaged in any act of sexual conduct apparently created or posted without their permission, including depictions that have been faked".

The Deepfakes subreddit - which had more than 91,000 subscribers - was among the first forums to be deleted after the policy's publication.

But the site has also taken down the Celebfakes forum, whose focus was faked static images. It had been in existence for about seven years.

In recent days, there had been concern among the Deepfakes community itself that some users had been using the technology to create clips that featured the faces of under-16s - in effect child abuse imagery.

Reddit has also clarified its policy over this practice.

"Reddit prohibits any sexual or suggestive content involving minors or someone who appears to be a minor," it said.

"Depending on the context, this can in some cases include depictions of minors that are fully clothed and not engaged in overtly sexual acts. If you are unsure about a piece of content involving a minor or someone who appears to be a minor, do not post it."

Reddit reaction

Reddit still allows posts featuring non-explicit videos created by the FakeApp software, and a forum dedicated to the tool itself remains online.

But comments by its users indicate they are split over its decision to ban the other material.

"Good. It was empathetically and morally bankrupt trash," wrote one user.

Another added: "So many people think Reddit is crossing the line and going too far with this when they should be crossing the line... You don't want to be anywhere remotely close to this line. No company should be faulted for wanting a sizable buffer between them and child pornography."

But someone else suggested the move would backfire: "You realise that this precedent sets you up for an eventual removal of all not-safe-for-work content... You have no way to make sure that NSFW content adheres to your new standards or not. This is going to be seen as a mistake in time."

Others suggested that the move would merely drive pornographic deepfakes to other sites more willing to tolerate them.

"They're not going away just because the Reddit admins threw a tantrum," wrote one.

- Published 7 February 2018