YouTube publishes deleted videos report


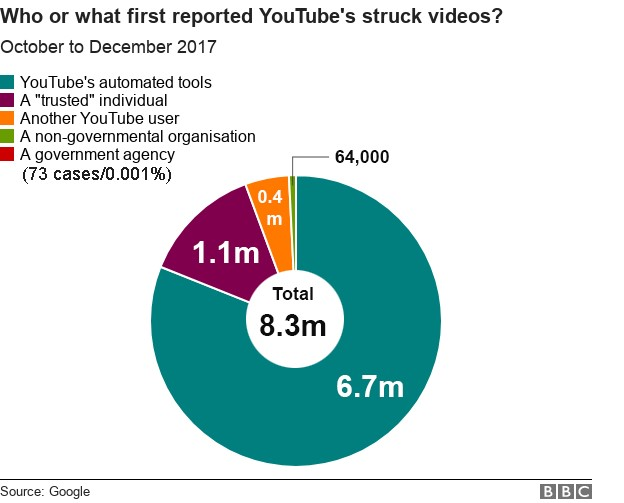

YouTube's first three-monthly "enforcement report" reveals the website deleted 8.3 million videos between October and December 2017 for breaching its community guidelines.

The figure does not include videos removed for copyright or legal reasons.

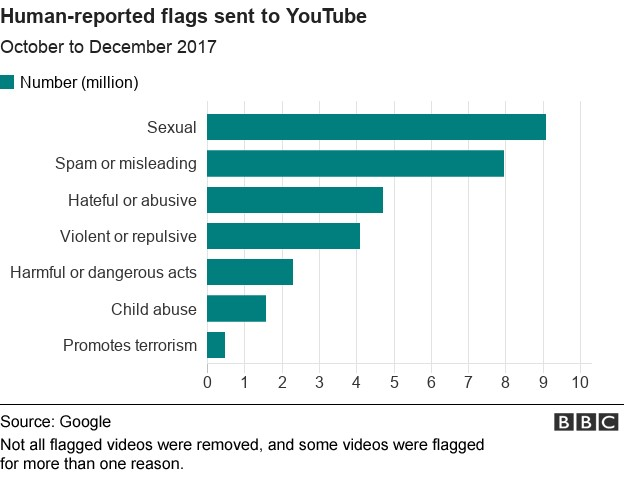

Sexually explicit videos attracted 9.1 million reports from the website's users, while 4.7 million were reported for hateful or abusive content.

Most complaints came from users in India, the US and Brazil.

YouTube said its algorithms had flagged 6.7 million videos that had then been sent to human moderators and deleted.

Of those, 76% had not been watched on YouTube, other than by the moderators.

The company told the BBC it stored a data "fingerprint" of deleted videos so that it could immediately spot if somebody uploaded the same video again.
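The report does not describe how the fingerprint is computed. As a rough illustration only, the sketch below assumes a plain SHA-256 hash of the file's bytes stands in for it, and the `FingerprintRegistry` class and its method names are hypothetical. An exact hash like this would only catch byte-for-byte identical re-uploads, so a real system would presumably need something more tolerant of re-encoding or trimming.

```python
import hashlib


class FingerprintRegistry:
    """Hypothetical store of fingerprints for videos that were removed."""

    def __init__(self):
        self._removed = set()

    @staticmethod
    def fingerprint(video_bytes: bytes) -> str:
        # Assumption: a SHA-256 digest of the raw bytes stands in for
        # whatever fingerprint YouTube actually stores.
        return hashlib.sha256(video_bytes).hexdigest()

    def record_removal(self, video_bytes: bytes) -> None:
        # Remember the fingerprint of a video that moderators deleted.
        self._removed.add(self.fingerprint(video_bytes))

    def is_known_removed(self, video_bytes: bytes) -> bool:
        # Check a new upload against the fingerprints of deleted videos.
        return self.fingerprint(video_bytes) in self._removed


registry = FingerprintRegistry()
registry.record_removal(b"bytes of a removed video")

print(registry.is_known_removed(b"bytes of a removed video"))    # True
print(registry.is_known_removed(b"bytes of a different video"))  # False
```

Storing only the digest means the deleted video itself does not have to be kept in order to recognise a repeat upload.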

In March, YouTube was criticised for its failure to remove four propaganda videos posted by the banned UK neo-Nazi group National Action.

Giving evidence to the UK's Home Affairs Committee, the company's counter-terrorism chief, William McCants, blamed human error for the delay in removing the videos.

But Yvette Cooper MP said the evidence given was "disappointing", "weak" and looked like "a failure to even do the basics".

The company has also been criticised for using algorithms to curate its YouTube Kids app for children. Inappropriate videos have repeatedly slipped through the net and appeared on YouTube Kids.

The report does not reveal how many inappropriate videos had been reported or removed from YouTube Kids.

YouTube will let people track their reports

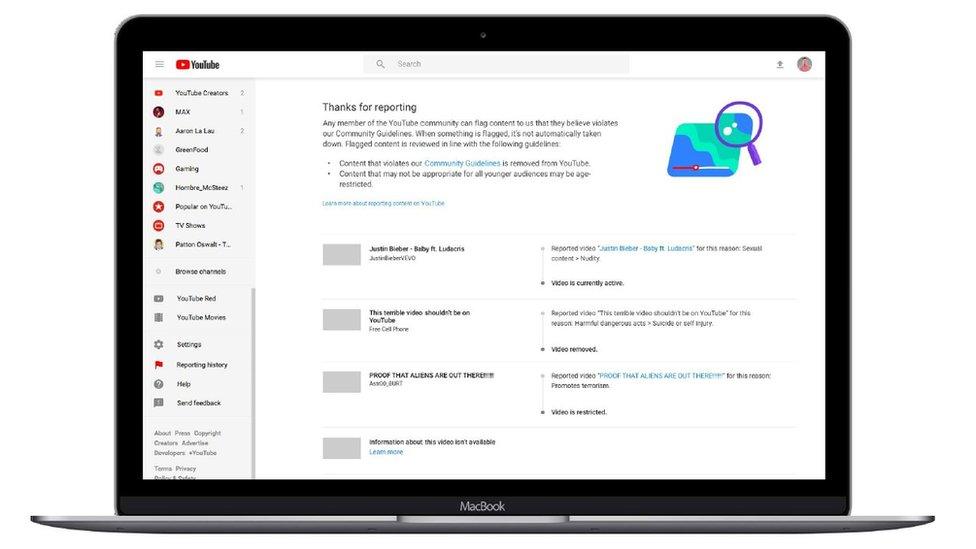

YouTube also announced the addition of a "reporting dashboard" to users' accounts, to let them see the status of any videos they had reported as inappropriate.

Top 10 countries flagging videos

1. India
2. US
3. Brazil
4. Russia
5. Germany
6. UK
7. Mexico
8. Turkey
9. Indonesia
10. Saudi Arabia

- Published 13 March 2018