IBM launches tool aimed at detecting AI bias


Algorithms and machine-learning could increasingly be used to calculate things like car insurance

IBM is launching a tool which will analyse how and why algorithms make decisions in real time.

The Fairness 360 Kit will also scan for signs of bias and recommend adjustments.

There is increasing concern that algorithms used by both tech giants and other firms are not always fair in their decision-making.

For example, in the past, image recognition systems have failed to identify non-white faces.

As algorithms increasingly make automated decisions about a wide variety of issues, such as policing, insurance and what information people see online, the implications of their recommendations become broader.

Often algorithms operate within what is known as a "black box" - meaning their owners can't see how they are making decisions.

The IBM cloud-based software will be open-source, and will work with a variety of commonly used frameworks for building algorithms.

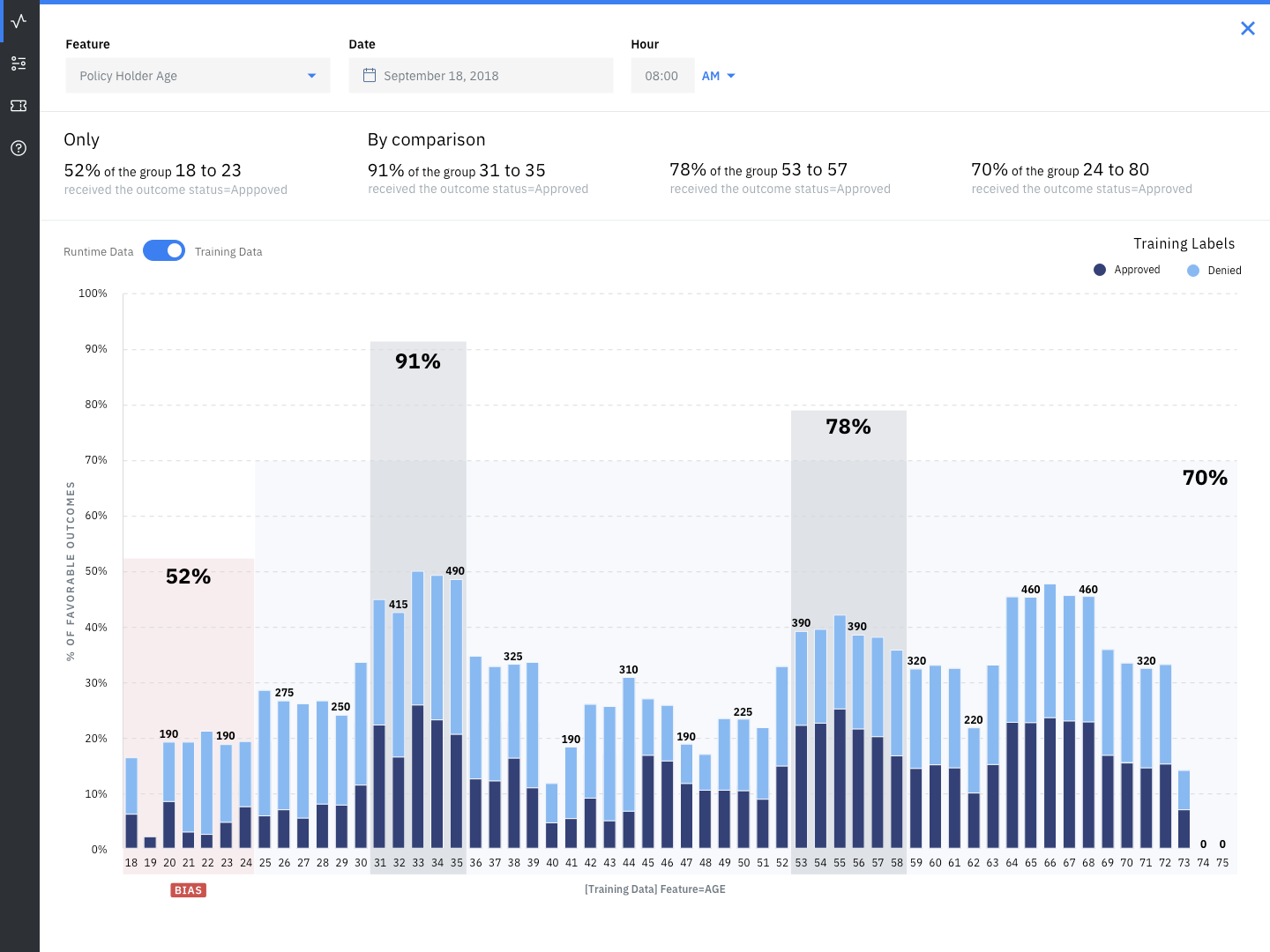

Customers will be able to see, via a visual dashboard, how their algorithms are making decisions and which factors are being used in making the final recommendations.

An example of the IBM dashboard

It will also track the model's record for accuracy, performance and fairness over time.

"We are giving new transparency and control to the businesses who use AI and face the most potential risk from any flawed decision-making," said David Kenny, IBM's senior vice president of Cognitive Solutions.

Bias tools

Other tech firms are also working on solutions.

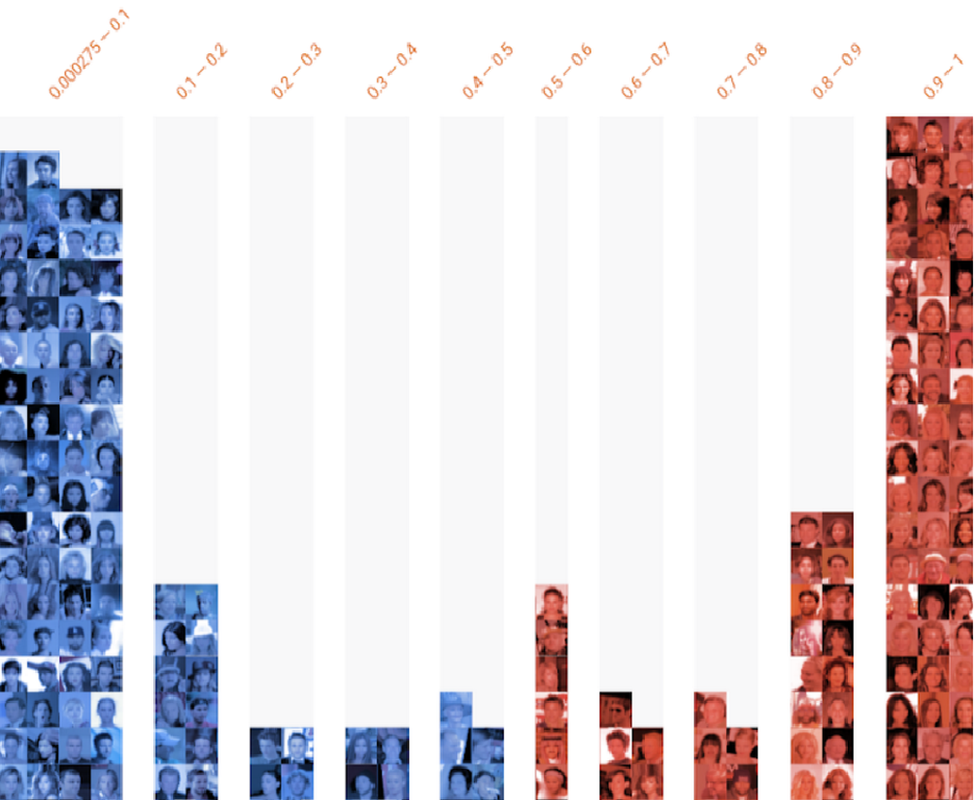

Last week, Google launched a "what-if" tool, also designed to help users look at how their machine-learning models are working.

However, Google's tool does not operate in real time - the data can be used to build up a picture over time.

An example of Google's What-If dashboard, showing faces identified as non-smiling in blue, and smiling in red, over time

Machine-learning and algorithmic bias is becoming a significant issue in the AI community.

Microsoft said in May that it was working on a bias detection toolkit, and Facebook has also said it is testing a tool to help it determine whether an algorithm is biased.

Part of the problem is that the vast amounts of data on which algorithms are trained are not always sufficiently diverse.

Joy Buolamwini found her computer system recognised the white mask, but not her face.

Joy Buolamwini launched the Algorithmic Justice League (AJL) while a postgraduate student at the Massachusetts Institute of Technology in 2016 after discovering that facial recognition only spotted her face if she wore a white mask.

And Google said it was "appalled and genuinely sorry" when its photo algorithm was discovered to be incorrectly identifying African-Americans as gorillas in 2015.

In 2017, UK police were warned by human rights group Liberty about relying on algorithms to decide whether to keep an offender in prison based purely on their age, gender and postcode.

There is a growing debate surrounding artificial intelligence and ethics, said Kay Firth-Butterfield from the World Economic Forum.

"As a lawyer, some of the accountability questions of how do we find out what made [an] algorithm go wrong are going to be really interesting," she said in a recent interview with CNBC.

"When we're talking about bias we are worrying first of all about the focus of the people who are creating the algorithms and so that's where we get the young white people, white men mainly, so we need to make the industry much more diverse in the West."
