Britain's 'bullied' chatbots fight back

Chatbots are being programmed to respond to abusive messages

UK chatbot companies are programming their creations to deal with messages containing swearing, rudeness and sexism, BBC News has learned.

Chatbots have received thousands of antisocial messages over the past year.

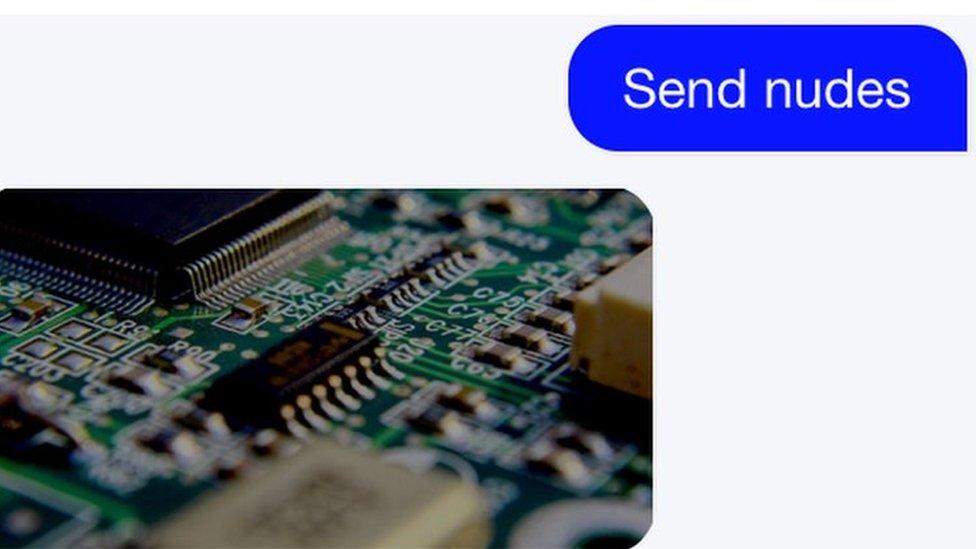

One financial chatbot has been asked out on a date nearly 2,000 times and to "send nude [picture]s" more than 1,000, according to its makers, Cleo AI.

The chatbot now responds to the request by sending an image of a circuit board.

Because chatbots reply automatically, they are almost always available

Cleo's chatbots now also:

- respond to "Make me a sandwich" with repeated images of sandwiches
- respond to potentially homophobic messages with "The complexities of human sexuality are rather beyond me, at present", along with a rainbow emoji.

Cleo's lead writer, Harriet Smith Hughes, told BBC News: "The usual protocol is to rebut gendered abuse with gifs from strong, outspoken female figures: Meryl Streep, [Star Trek's] Capt Kathryn Janeway, Ripley in Alien.

"Some responses are harsher: screenshots from feminist horror film Teeth is always good."

Some chatbots have specific responses ready for antisocial messages

Paul Shepherd, of We Build Bots, told BBC News its chatbots had seen their "fair share of abuse".

But its chatbots in retail services received three times as many critical or abusive messages as its others, at 3% of all messages.

"We think this is because there's often a physical purchase involved and people take it out on the bot when a delivery is late or wrong," he told BBC News.

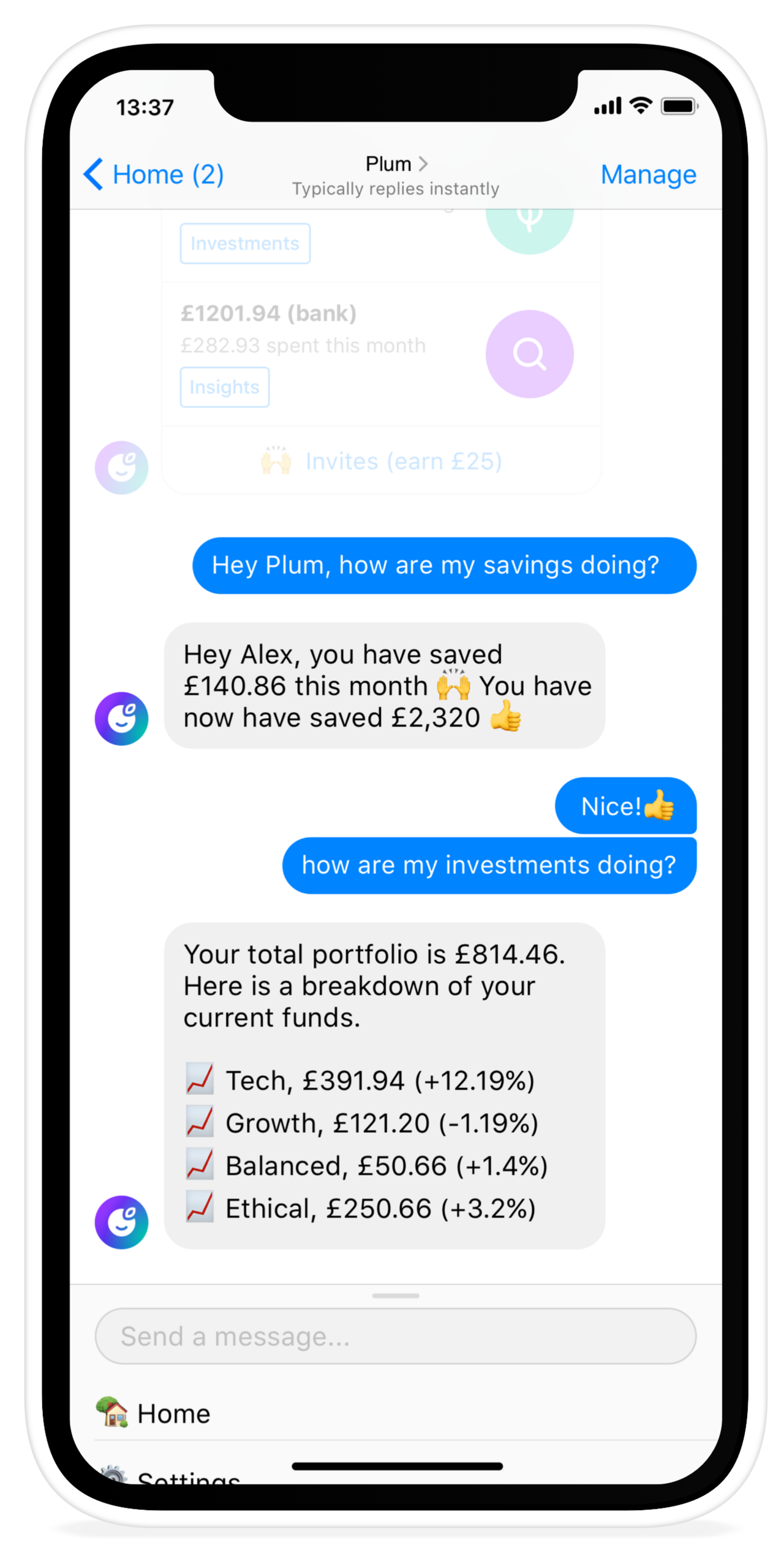

Victor Trokoudes, founder of financial chatbot Plum, told BBC News that some of its users were likely to stress-test the chatbot during the first few days of use, sometimes by swearing at it.

"We realised that early on users will test how real Plum would be and what happens if I say something nasty to it," he said.

But the proportion of "nasty" messages then dropped, from about 5% in the first week of use to 0.1% after the first month.

"We collected all 'negative' phrases that people have said and evolved Plum to respond to them in a funny way," Mr Trokoudes told BBC News.

Plum is now programmed to respond: "I might be a robot but I have digital feelings. Please don't swear."

"That really had a positive effect and people would feel Plum was actually a smart entity on the other side of the chat," Mr Trokoudes added.