Matter of fact-checkers: Is Facebook winning the fake news war?

Facebook launched its fact-checking programme one month after US President Donald Trump's election in 2016.

For the people contracted by Facebook to clamp down on fake news and misinformation, one doubt hangs over them every day: is it working?

"Are we changing minds?" wondered one fact-checker, based in Latin America, speaking to the BBC.

"Is it having an impact? Is our work being read? I don't think it is hard to keep track of this. But it's not a priority for Facebook.

"We want to understand better what we are doing, but we aren't able to."

More than two years on from its inception, and on International Fact-Checking Day, multiple sources within agencies working on Facebook's global fact-checking initiative have told the BBC they feel underutilised, uninformed and often ineffective.

One editor described how their group would stop working when it neared its payment cap - a maximum number of fact-checks in a single month for which Facebook is willing to pay.

Others told how they felt Facebook was not listening to their feedback on how to improve the tool it provides to sift through content flagged as "fake news".

"I think we view the partnership as important," one editor said.

"But there's only so much that can be done without input from both sides."

As the US prepares to hurl itself into another gruelling presidential campaign, experts feel Facebook remains ill-equipped to fend off fake news.

Despite this, Facebook said it was pleased with progress made so far, pointing to external research that suggested the amount of fake news shared on its platform was decreasing.

In Trump's wake

Facebook requires its fact-checkers to sign non-disclosure agreements that prevent them talking publicly about some aspects of their work.

To avoid identifying its sources, the BBC has chosen to keep them anonymous and not to cite specific numbers that may be unique to individual contracts.

Facebook launched its fact-checking programme in December 2016, just over a month after the election of Donald Trump as US president.

It was a victory some felt was helped by misinformation spread on social media, chiefly Facebook.

At the time, founder and chief executive Mark Zuckerberg said such a notion was "crazy" - though he later told a congressional committee that he regretted using the term.

Facebook now has 43 fact-checking organisations working with it across the world, covering 24 different languages.

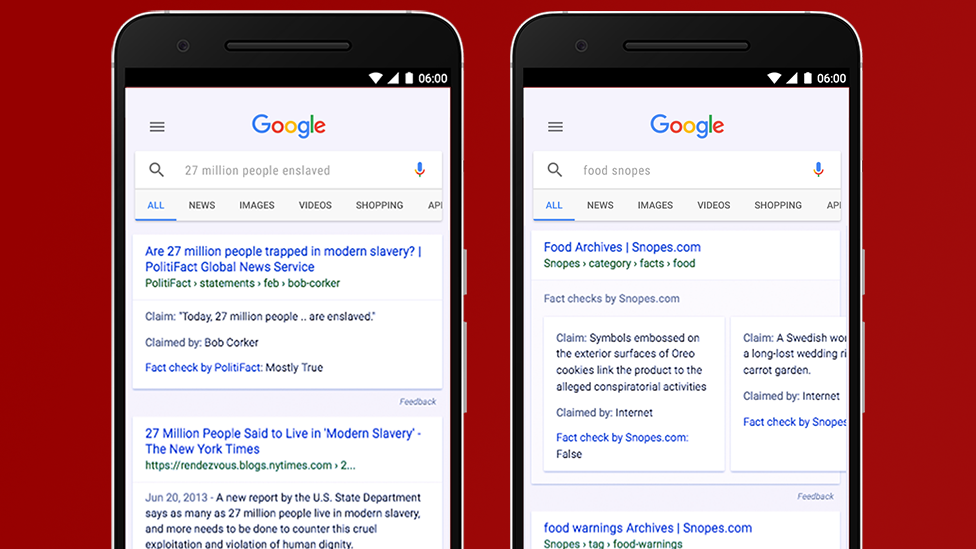

The groups use a tool built by Facebook to sift through content that has been flagged as potentially false or misleading.

The flagging is done either by Facebook's algorithm or by human users reporting articles they believe may be inaccurate.

The fact-checkers will then research whatever claims are made, eventually producing their own "explanatory article".

If content is deemed misleading or outright false, users who posted it are meant to receive a notification, and the post is shown less prominently as a result.

For those trying to post the material after it has been checked, a pop-up message advises them about the fact-checkers' concerns.

For each explanatory article, Facebook pays a fixed fee, which, in the US, is understood to be around $800 (£600), according to contracts described to the BBC.

Fact-checkers in the developing world appear to be paid around a quarter of that amount.

Downed tools

What has not been previously reported, however, is that at the beginning of 2019, Facebook put in place a payment cap: a monthly limit on the number of explanatory articles, beyond which fact-checking agencies would not be paid for their work.

Typically, the limit is 40 articles per month per agency - even if the group works across several countries.

It's a fraction of the total job at hand - a screenshot of Facebook's tool, taken last week by a fact-checker in one Latin American country, showed 491 articles in the queue waiting to be checked.

Facebook has confirmed what it called an "incentive-based structure" for payment, one which increased during busy times, such as an election.

The company said the limit was created in line with the capabilities of the fact-checking firms, and that the limits were rarely exceeded.

However, some groups told the BBC they would "never have a problem" reaching the limit.

One editor said their staff would simply stop submitting their reviews to Facebook's system as the cap neared, so as not to be fact-checking for free.

"We're still working on stuff, but we'll just hold it until next month," they said.

Discontent

Snopes no longer works with Facebook

Earlier this year, US-based fact-checking agency Snopes said it was ending its work with Facebook.

"We want to determine with certainty that our efforts to aid any particular platform are a net positive for our online community, publication, and staff," Snopes said in a statement at the time.

Another major partner, the Associated Press, told the BBC it was still negotiating its new contract with Facebook. However, the AP does not appear to have done any fact-checking directly on Facebook since the end of 2018.

Snopes' statement echoed the concerns of those who were still part of the programme.

"We don't know how many people have been reached," one editor said.

"I feel we are missing very important information about who is publishing fake news inside of Facebook consistently."

'Room to improve'

A Facebook spokeswoman told the BBC the firm was working on increasing the quality of its fact-checking tools - and being more open about data.

"We know that there's always room for us to improve," the company said.

"So we'll continue to have conversations with partners about how we can be more effective and transparent about our efforts."

The firm said it has recently started sending quarterly reports to agencies.

These contain snapshots of their performance, such as what portion of users decided not to post material after being warned it was unreliable. One document seen by the BBC suggests that, in at least one country, it is more than half.

There are also concerns about fake news spreading on Facebook-owned messaging platform WhatsApp

But the problem is evolving rapidly.

As well as Facebook's main network, its messaging app WhatsApp has been at the centre of a number of brutal attacks, apparently motivated by fake news shared in private groups.

While there are efforts from fact-checking organisations to debunk dangerous rumours within the likes of WhatsApp, Facebook has yet to provide a tool - though it is experimenting with some ideas to help users report concerns.

Opening up

These challenges did not come as a surprise to those who have studied the effects of misinformation closely.

Claire Wardle, chair of First Draft, an organisation that supports efforts to combat misinformation online, said the only way for Facebook to truly solve its issues was to give outsiders greater access to its technology.

"From the very beginning, my frustration with Facebook's programme is that it's not an open system," she told the BBC.

"Having a closed system that just Facebook owns, with Facebook paying fact-checkers to do this work just for Facebook, I do not think is the kind of solution that we want."

Instead, she suggested, Facebook must explore the possibility of crowdsourcing fact-checks from a much wider source of expertise, something Mark Zuckerberg appears to be considering. Such an approach would, of course, bring new problems, including attempts to game the system.

So, for now at least, and despite their serious reservations, most of those battling misinformation on Facebook have pledged to continue with what is becoming an increasingly Sisyphean ordeal.