MPs call for halt to police's use of live facial recognition

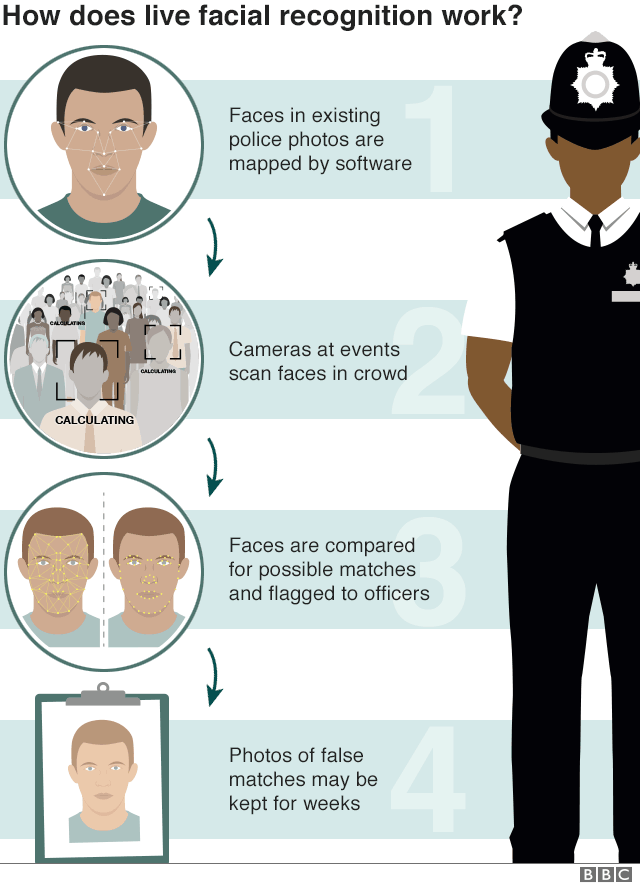

The police and other authorities should suspend use of automatic facial recognition technologies, according to an influential group of MPs.

The House of Commons Science and Technology Committee added there should be no further trials of the tech until relevant regulations were in place.

It raised accuracy and bias concerns.

And it warned that police forces were failing to edit a database of custody images to remove pictures of unconvicted individuals.

"It is unclear whether police forces are unaware of the requirement to review custody images every six years, or if they are simply 'struggling to comply'," the committee's report said.

"What is clear, however, is that they have not been afforded any earmarked resources to assist with the manual review and weeding process."

As a consequence, the MPs warned, innocent people's pictures might illegally be included in facial recognition "watch lists" that are used in public spaces by the police to stop and even arrest suspects.

The committee noted that it had flagged similar concerns a year ago but had seen little progress from the Home Office since. By contrast, it said, the Scottish government had commissioned an independent review into how biometric data should be used and stored.

The report comes a week after the Home Secretary Sajid Javid said he backed police trials of facial recognition systems, while acknowledging that longer-term use would require legislation.

Earlier this month, the Information Commissioner Elizabeth Denham said the police's use of live facial recognition tech raised "significant privacy and data protection issues" and might even breach data protection laws.

The civil rights group Liberty has also supported a legal challenge to South Wales Police's use of the technology in a case that has yet to be ruled on by a judge at Cardiff High Court.

And the Surveillance Camera Commissioner Tony Porter has criticised trials by London's Metropolitan Police, saying: "We are heading towards a dystopian society where people aren't trusted, where they are logged and their data signatures are tracked."

The Home Office, however, has noted that there is public support for live facial recognition to identify potential terrorists and people wanted for serious violent crimes.

"The government believes that there is a legal framework for the use of live facial recognition technology, although that is being challenged in the courts and we would not want to pre-empt the outcome of this case," said a spokesman.

"However, we support an open debate about this, including how we can reduce the privacy impact on the public."

The Home Office also recently revealed that the Kent and West Midlands forces plan to test facial recognition software to retrospectively analyse CCTV recordings to spot missing and vulnerable people.

"The public would expect the police to consider all new technologies that could make them safer," a spokesman for the National Police Chiefs' Council told the BBC.

"Any wider roll out of this technology must be based on evidence showing it to be effective with sufficient safeguards and oversight."

Racial and gender bias

As part of its report, the committee highlighted earlier work that had raised concerns of bias.

It referred specifically to a government advisory group that had warned in February that facial recognition systems could produce inaccurate results if they had not been trained on a diverse enough range of data.

"If certain types of faces - for example, black, Asian and ethnic minority faces or female faces - are under-represented in live facial recognition training datasets, then this bias will feed forward into the use of the technology by human operators," the ethics group had cautioned.

While police officers were supposed to double-check matches made by the system by other means before taking action, the group also warned that there was a risk that they might "start to defer to the algorithm's decision" without doing so.

As such, the committee said that ministers needed to set clearer limits on the tech's use.

"We call on the government to issue a moratorium on the current use of facial recognition technology and no further trials should take place until a legislative framework has been introduced and guidance on trial protocols, and an oversight and evaluation system, has been established," it concluded.

One think tank chief welcomed the recommendation but said the real problems were not ones of bias or accuracy.

"These are a distraction from the wider question of whether we want to have this technology at all," Areeq Chowdhury from Future Advocacy told the BBC.

"Before any further deployment of facial recognition by the police, we need to have a public conversation about whether we are happy for our faces to become a tool of national security."

Custody images

The committee also flagged issues with the way custody images were being stored in the Police National Database.

As of February 2018, the PND had 12.5 million images available to facial recognition searches.

People who have been acquitted or had charges against them dropped can apply to have their images removed.

But the MPs noted that despite guidance that images of unconvicted individuals should be removed by hand after six years, this was not being done.

"The government should strengthen the requirement for such a manual process to delete custody images and introduce clearer and stronger guidance on the process," the committee's report said.

"In the long-term the government should invest in automatic deletion software as previously promised."

The privacy campaign Big Brother Watch supported the call.

"This practice was ruled unlawful by the High Court in 2012 - it is shameful that the government has failed to act," said Griff Ferris, the group's legal and policy officer.