Facebook puts $10m into effort to spot deep fake videos


Deep fake systems were used to put words into the mouth of former US President Barack Obama

A $10m (£8.1m) fund has been set up to find better ways to detect so-called deep fake videos.

Facebook, Microsoft and several UK and US universities are putting up the cash for the wide-ranging research project.

Deep fake clips use AI software to make people, often politicians or celebrities, appear to say or do things they never said or did.

Many fear such videos will be used to sow distrust or manipulate opinion.

The cash will help create detection systems as well as a "data set" of fakes that the tools can be tested against.

"The goal of the challenge is to produce technology that everyone can use to better detect when AI has been used to alter a video in order to mislead the viewer," wrote Mike Schroepfer, chief technical officer at Facebook, in a blog post outlining the project.

One of the key elements will be to create the data set used to calibrate and rate the different fake-spotting systems.

Mr Schroepfer said data sets for other AI-based systems, including those that can identify images or spoken language, had fuelled a "renaissance" in those technologies and prompted wide innovation.

The data set will be generated using paid actors to perform scenes which can then be used to create deep fake videos that the different detection systems will attempt to spot.

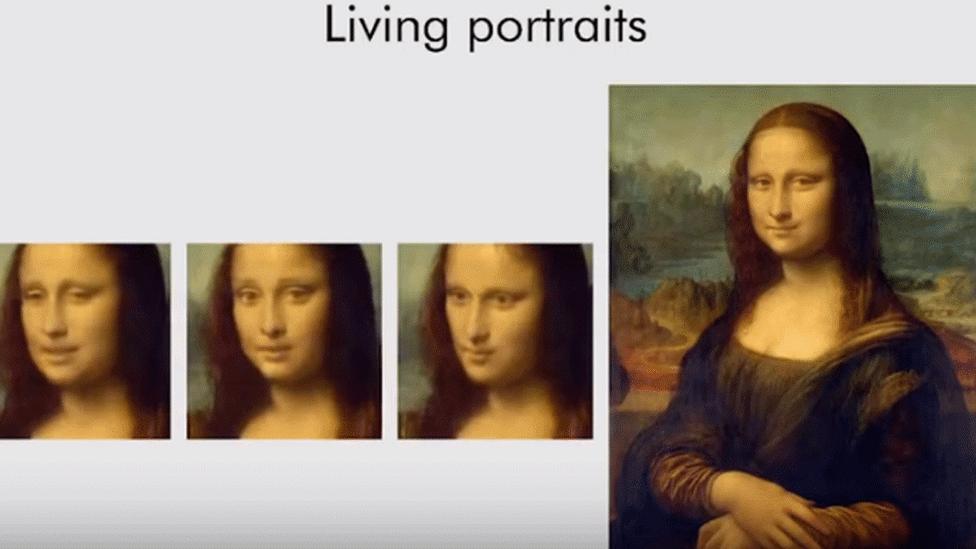

Deep fake technology was used to bring Mona Lisa to life

No Facebook data will be used to generate this freely available bank of images, audio clips and videos, said Mr Schroepfer.

He added that the database of deep fakes will be made available in December.

The cash will also fund work at several universities, including Cornell, Oxford and UC Berkeley.

The technology to create convincing fakes has spread widely since it first appeared in early 2018. It is now much easier and quicker to create the videos, and many worry that such clips will feature in propaganda campaigns.

One commentator said the tools to spot the fakes may not solve all the problems the technology presents.

"It's a cat-and-mouse game," Siddharth Garg, an assistant professor of computer engineering at New York University, told Reuters.

"If I design a detection for deep fakes, I'm giving the attacker a new discriminator to test against."
