Instagram trains AI to detect offensive captions


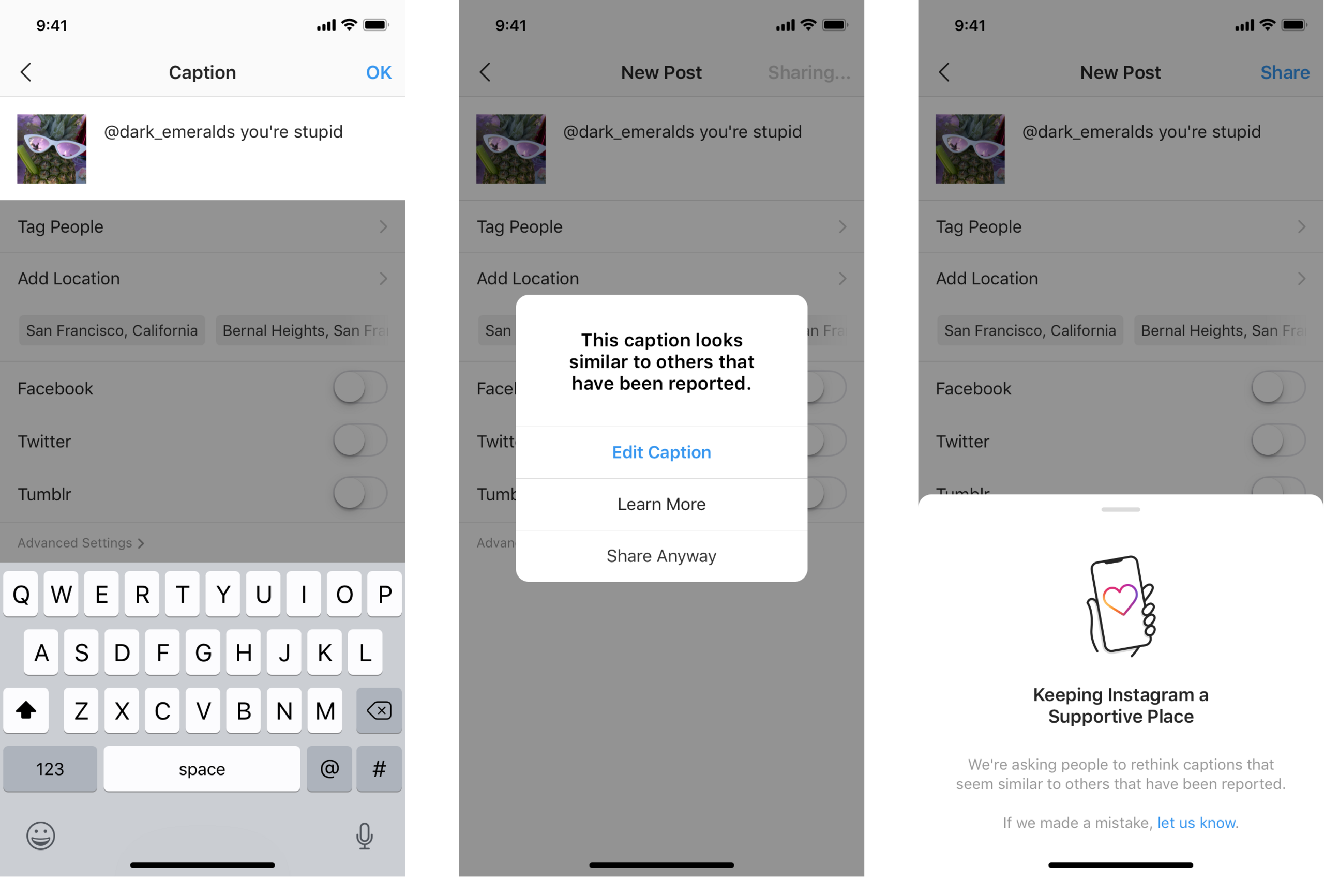

Instagram is to warn users when their captions on a photo or video could be considered offensive.

The Facebook-owned company says it has trained an AI system to detect offensive captions.

The idea is to give users "a chance to pause and reconsider their words".

Instagram announced the feature in a blog post on Monday, saying it would be rolled out immediately to some countries.

The tool is designed to help combat online bullying, which has become a major problem for platforms such as Instagram, YouTube, and Facebook.

Instagram was ranked as the worst online platform in a cyber-bullying study in July 2017.

If a user with access to the tool types an offensive caption on Instagram, they will receive a prompt informing them it is similar to others reported for bullying.

Users will then be given the option to edit their caption before it is published.

"In addition to limiting the reach of bullying, this warning helps educate people on what we don't allow on Instagram and when an account may be at risk of breaking our rules," Instagram wrote in the post.

Earlier this year, Instagram launched a similar feature that notified people when their comments on other people's Instagram posts could be considered offensive.

"Results have been promising and we've found that these types of nudges can encourage people to reconsider their words when given a chance," Instagram wrote.

Chris Stokel-Walker, internet culture writer and author of the book YouTubers, told BBC News the feature was part of a broader move by Instagram to be more aware of the wellbeing of its users.

"From cracking down on promoting images of self-harm, to hiding 'likes' so people outwardly are less likely to equate their self-worth with how many people press 'like' on their photos, the app has been making moves to try and roll back some of the more damaging changes it's had on society," he said.
