Coronavirus: Call for apps to get fake Covid-19 news button

Social networks need a dedicated button to flag up bogus coronavirus-related posts, an advocacy group has said.

The Center for Countering Digital Hate (CCDH) said the apps had "missed a trick" in combating the problem.

The call coincides with a study that indicates 46% of internet-using adults in the UK saw false or misleading information about the virus in the first week of the country's lockdown.

Ofcom said the figure rose to 58% among 18-to-24-year-olds.

The communications watchdog said the most common piece of false advice seen during the week beginning 23 March was the claim that drinking more water could flush out an infection.

Incorrect claims that Covid-19 could be alleviated by gargling salt water or avoiding cold food and drink were also widely seen.

The watchdog intends to survey 2,000 people each week to help track the issue.

On Wednesday, the Digital Secretary, Oliver Dowden, had a virtual meeting with Facebook, Twitter and YouTube's owner, Google.

During the call the firms committed themselves to:

- developing further technical solutions to combat misinformation and disinformation on platforms
- weekly reporting on related misinformation trends
- improving out-of-hours coverage and response rates to harmful misinformation
- providing messaging to users about how to identify and respond to misinformation

'Barrier to action'

Tech firms have stepped up their efforts to tackle fake reports in recent days.

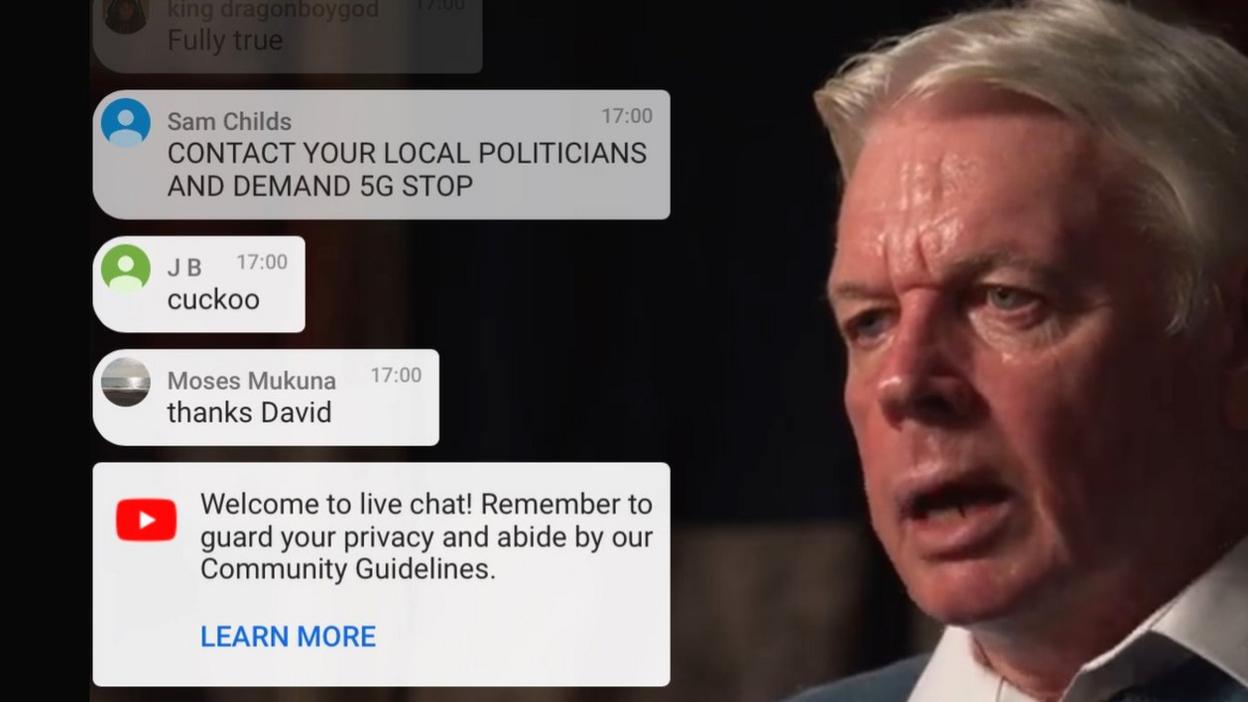

This includes WhatsApp limiting how many chats users can forward frequently shared messages to at once, and YouTube banning videos that falsely claim 5G is linked to Covid-19.

But CCDH says the public needs an easier way to flag misinformation about the disease than at present.

The lack of such a dedicated button creates a "barrier to action" that discourages users from hunting through the options to report offending posts, the group's chief executive, Imran Ahmed, told the BBC.

At present:

- Twitter says two options are suitable for reporting coronavirus misinformation: "suspicious or spam" and "abusive or harmful". But clicking on either asks the user to narrow it down to more specific categories, none of which match misinformation or conspiracy theories
- YouTube suggests using its "harmful dangerous acts" category, which has options for drug abuse and self-injury but not misinformation
- TikTok has a spam category, but no clear category for misinformation
- Facebook does have a "false news" category for reports, but Mr Ahmed says that is "quite different from misinformation". It is also a politically charged term, he says, and "no-one knows what it means any more"

The CCDH chief is also concerned that users are often encouraged to block or mute the reported accounts.

That means "you don't see the reality, which is that they might delete a post, but very rarely delete accounts," he said.

MP Damian Collins recently set up fact-checking service Infotagion to combat misinformation about the pandemic.

He has called for the deliberate spreading of misinformation to be made an offence - and says Facebook and other social networks should take action against the administrators of groups containing the posts.

"[Tech firms] act on it if it poses imminent physical harm, but if it's other information - like conspiracy theories - then that doesn't meet their test as to if an item should be removed," Mr Collins said, before YouTube toughened its policy relating to 5G.

"There's not necessarily a blanket ban on misinformation about Covid-19."

Racist posts

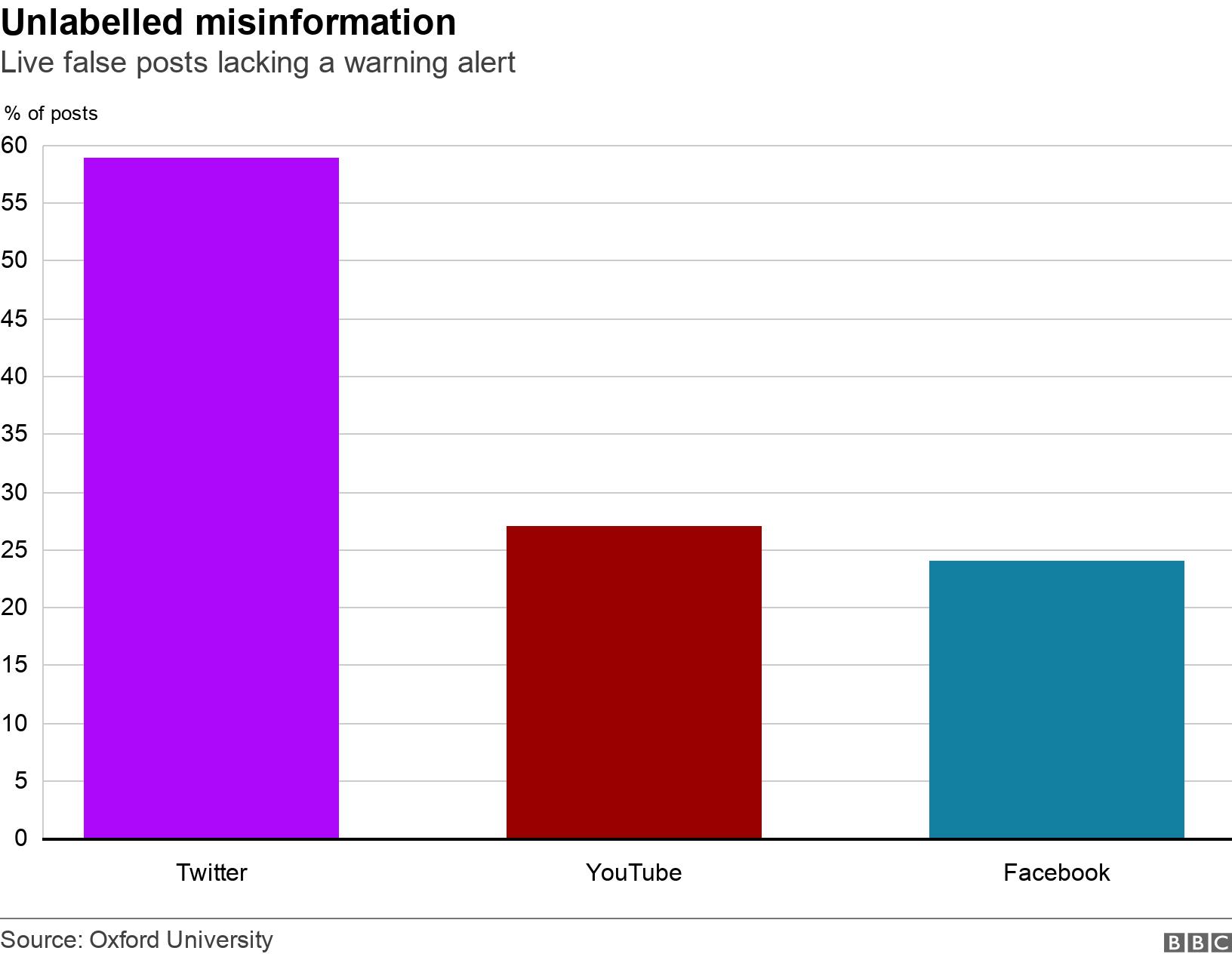

Earlier in the week, Oxford University's Reuters Institute for the Study of Journalism published its own research into the spread of online misinformation about coronavirus.

It analysed 225 posts that had been rated false or misleading by professional fact-checkers over the first three months of the year.

It said the most common false claims concerned how public authorities were responding to the crisis.

The second-most frequent kind concerned the spread of the disease among communities, including posts that blamed certain ethnic groups.

The study added that the three core social networks - Facebook, YouTube and Twitter - had all removed, labelled or taken other action against most of the posts flagged to them by independent fact-checkers.

But it said there was "significant variation" in how they treated the posts that remained online.

None of the social networks contacted by the BBC disclosed plans to introduce a specific coronavirus reporting tool.

But they did claim to have taken substantial steps to combat problematic coronavirus posts.

Twitter says it catches half the tweets that break its rules before anyone reports them, but it has asked people to keep reporting such tweets.

TikTok said it was focusing on providing information from authoritative sources, and that its guidelines explicitly banned misinformation that could harm people.

Facebook said it was removing content about the virus that had clearly been debunked by an authoritative source but was prioritising posts that could cause direct harm to people.

- Published 7 April 2020