Coronavirus: Harmful lies spread easily due to lack of UK law


Misleading and harmful online content about Covid-19 has spread "virulently" because the UK still lacks a law to regulate social media, an influential group of MPs has said.

The Digital, Culture, Media and Sport Committee urged the government to publish a draft copy of promised legislation by the autumn.

It follows suggestions the Online Harms Bill might not be in force until 2024.

The group's chairman said tech firms could not be left to self-regulate.

"We still haven't seen correct legislative architecture put in place, and we are still relying on social media companies' consciences," said Julian Knight.

"This just is not good enough. Our legislation is not in any way fit for purpose, and we're still waiting. What I've seen so far has just been quite a lot of delay."

Google and Facebook have said they have invested in measures to tackle posts that breach their guidelines.

But the report has already been welcomed by the children's charity NSPCC.

"The committee is right to be concerned about the pace of legislation and whether the regulator will have the teeth it needs," said Andy Burrows, its head of child safety online policy.

'Duty of care'

The committee's report specifically calls for the recommendations set out in the Online Harms White Paper, published in April of last year, to be made into law.

The paper suggested a legal "duty of care" should be created to force tech companies to protect their users, and that a regulatory body be set up to enforce the law.

The government has said legislation will be introduced "as soon as possible".

But last month, a House of Lords committee that looked into the same issue reported that the law might not come into effect for another three or four years.

In its own report, the DCMS committee said it was concerned that the delayed legislation would not address the harms caused by misinformation and disinformation spread about fake coronavirus cures, 5G technology and other conspiracy theories related to the pandemic.

It also claimed social media firms' advertising-focused business models had encouraged the spread of misinformation and allowed "bad actors" to make money from emotional content, regardless of the truth.

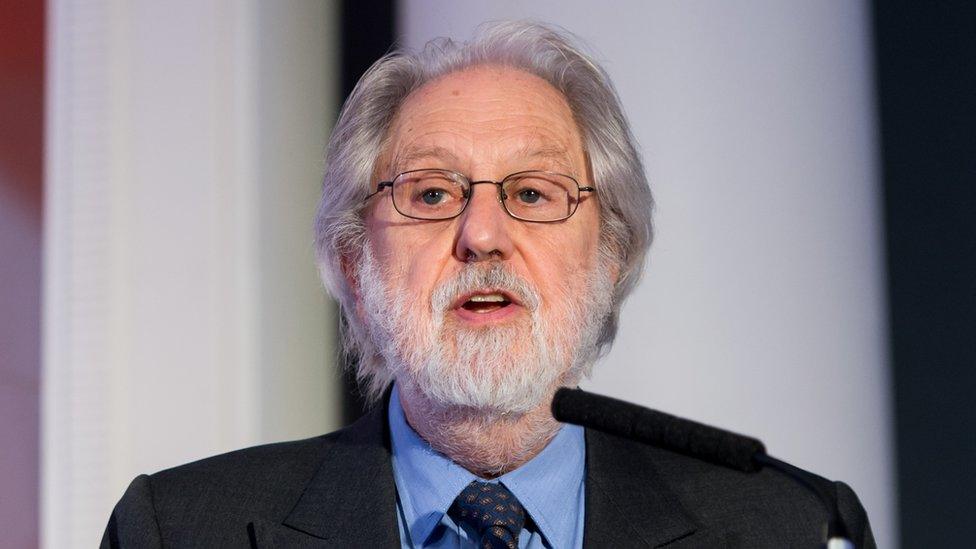

Julian Knight took charge of the DCMS select committee earlier this year

"As a result the public is reliant on the good will of tech companies or the 'bad press' they attract to compel them to act," the report said.

Speaking to the BBC, Mr Knight said the major players - Facebook, Twitter and Google owner YouTube - now had to be dragged "kicking and screaming" to do more to regulate their platforms.

"We need social media companies to actually be ahead of the game and we need government as well to be very clear to them," he added.

"This is not a freedom of speech issue. This is a public health issue."

Facebook has responded: "We don't allow harmful misinformation and have removed hundreds of thousands of posts including false cures, claims that coronavirus doesn't exist, that it's caused by 5G or that social distancing is ineffective.

"In addition to what we remove, we've placed warning labels on around 90 million pieces of content related to Covid-19 on Facebook during March and April."

YouTube said: "We have clear policies around promoting misinformation on YouTube, and updated our policies to ensure that content on the platform aligns with NHS and WHO [World Health Organization] guidance.

"When videos are flagged to us, we work quickly to review them in line with these policies and take appropriate action."

Twitter told the BBC its top priority was "protecting the health of the public conversation - this means surfacing authoritative public health information and the highest quality and most relevant content and context first".

Anti-vaccine conspiracies

The report also lists some of the main groups responsible for spreading online misinformation.

They include:

- state actors, including Russia, China and Iran
- the Islamic State group
- far-right groups in the US and the UK
- scammers

Individuals had also, for various reasons, contributed by sharing false information and ideas about fake cures with others online during the pandemic, the MPs said.

Mr Knight also expressed concern that anti-vaccine conspiracy theories might frustrate efforts to tackle Covid-19 once a suitable preventative treatment became available.

Social media companies, he added, "need to ensure that they aren't just neutral in this - they absolutely must take an active part in ensuring that our society, our neighbours, our friends and our loved ones are safe".

The report also criticised the government for setting up its own Counter Disinformation Unit in March.

It suggested this was late, since fake news about coronavirus had begun spreading online in January, adding that in any case the unit had largely duplicated the work of other organisations.
