Inside the controversial US gunshot-detection firm

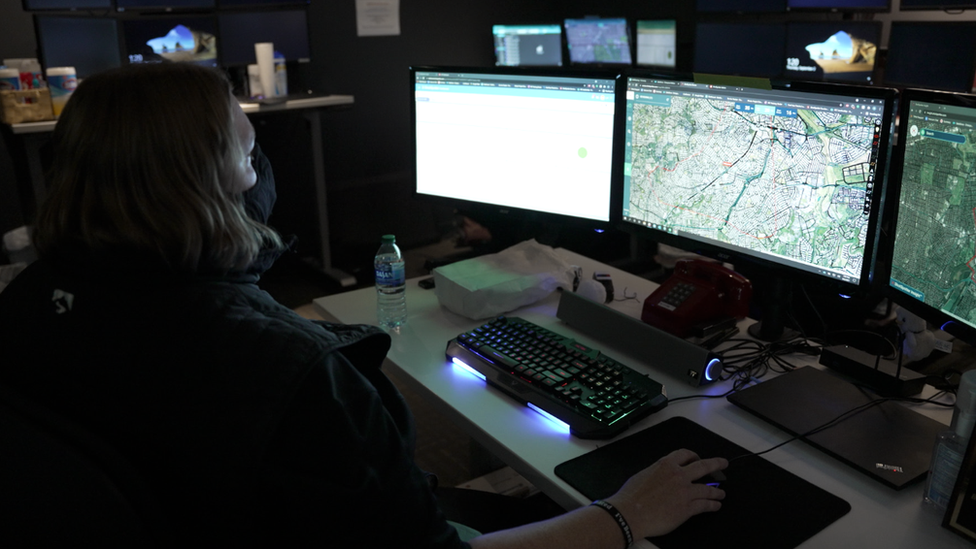

A ShotSpotter analyst at a workstation

ShotSpotter's incident-review room is like any other call centre.

Analysts wearing headsets sit by computer screens, listening intently.

Yet the people working here have an extraordinary responsibility.

They make the final decision on whether a computer algorithm has correctly identified a gunshot - and whether to dispatch the police.

Making the wrong call has serious consequences.

ShotSpotter has garnered much negative press over the last year. Allegations range from its tech not being accurate, to claims that ShotSpotter is fuelling discrimination in the police.

In the wake of those negative news stories, the company gave BBC News access to its national incident-review centre.

ShotSpotter is trying to solve a genuine problem.

"What makes the system so compelling, we believe, is a full 80-95% of gunfire goes unreported," chief executive Ralph Clark says.

ShotSpotter chief executive Ralph Clark

People don't report gunshots for several reasons - they may be unsure what they have heard, think someone else will call 911 or simply lack trust in the police.

So ShotSpotter's founders had an idea. What if they could bypass the 911 process altogether?

They came up with a system.

Microphones are fixed to structures around a neighbourhood. When a loud bang is detected, a computer analyses the sound and classifies it as either a gunshot or something else. A human analyst then steps in to review the decision.
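The two-stage flow described above can be sketched in a few lines of Python. This is only an illustration, assuming the analyst's judgement always overrides the machine's; the names `Label`, `Detection` and `should_dispatch` are hypothetical and are not taken from ShotSpotter's software.

```python
from dataclasses import dataclass
from enum import Enum


class Label(Enum):
    GUNSHOT = "gunshot"
    OTHER = "other"


@dataclass
class Detection:
    # The computer's first-pass classification of the loud bang.
    machine_label: Label


def should_dispatch(detection: Detection, analyst_label: Label) -> bool:
    """Assumption: the human analyst has the final say, so police are
    sent only when the analyst judges the sound to be a gunshot,
    whatever the machine decided."""
    return analyst_label is Label.GUNSHOT
```

In this sketch the machine's label is recorded but never decides the outcome, which mirrors the article's point that a person, not the algorithm, makes the final call.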

In the incident-review room, former teacher Ginger Ammon allows me to sit with her as she analyses these decisions in real time.

Every time the algorithm flags a potential shot, it makes a "ping" sound.

Ms Ammon first listens to the recording herself and then studies the waveform it produces on her computer screen.

ShotSpotter analyst Ginger Ammon

"We're looking to see how many sensors picked it up and if the sensors made a directional pattern, because, in theory, a gunshot can only travel in one direction," she says.

Once confident a shot has been fired, Ms Ammon clicks a button that dispatches police officers to the scene.

It all happens in under 60 seconds.

"It kind of feels like you're playing a computer game," I say.

"That is a comment that we get frequently," she replies.

ShotSpotter's successes

There are clear examples of ShotSpotter working.

In April 2017, black supremacist Kori Ali Muhammad went on a murderous rampage in Fresno, California.

Trying to kill as many white men as possible, he walked around a suburban neighbourhood, picking off targets.

The police were receiving 911 calls - but they were delayed and unspecific.

Yet ShotSpotter was able to indicate to officers Muhammad's route.

After three minutes - and three murders - Muhammad was apprehended.

Fresno police believe that without ShotSpotter, he would have killed more.

"ShotSpotter gave us the path he took," says Lt Bill Dooley.

The company refuses to publicly reveal what its microphones look like - but this is believed to be one

The company has been hugely successful at convincing police forces to adopt its technology.

Its microphones are in more than 100 cities across America - and for years, the technology was considered uncontroversial.

That all changed with George Floyd's murder, as people became more interested in the technology so many police forces were using.

ShotSpotter is too expensive for the police to roll out across a city.

Instead, microphones are often placed in inner-city areas - areas with higher black populations.

So if the technology isn't as accurate as claimed, it could be having a disproportionate impact on those communities.

Suddenly, ShotSpotter was in the spotlight.

Accuracy concerns

ShotSpotter claims to be 97% accurate. That would mean that when a ShotSpotter alert comes in, the police can be pretty confident they are responding to a gunshot.

But that claim is exactly that, a claim. It's hard to see how ShotSpotter knows it's that accurate - at least not with the public information it has released.

And if it isn't, it could have wide consequences for American justice.

The first problem with that accuracy claim is that it's often difficult to tell, on the ground, whether a shot has been fired.

When Chicago's Inspector General investigated, they found that in only 9% of ShotSpotter alerts was there any physical evidence of a gunshot.

Chicago Deputy Inspector General for Public Safety Deborah Witzburg

"It's a low number," the city's Deputy Inspector General for Public Safety, Deborah Witzburg, says.

That means in 91% of police responses to ShotSpotter alerts, it's difficult to say definitively that a gun was fired. That's not to say there was no gunshot, but it is hard to prove that there was.

Gunfire sounds very similar to a firecracker or a car backfiring.

So how is ShotSpotter so confident that it's nearly 100% accurate? It's something I ask Mr Clark.

"We rely on ground truth from agencies to tell us when we miss, when we miss detections or when we misclassify," he tells me.

But critics say that methodology has a fundamental flaw. If the police are unsure whether a gunshot has been fired, they are not going to tell the company it was wrong.

In other words, say critics, the company has been counting "don't knows", "maybes", and "probablys" as "got it rights".
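The critics' point can be shown with some simple arithmetic. The sketch below uses made-up numbers, not ShotSpotter's data: 1,000 alerts, of which 90 are confirmed by physical evidence (matching the 9% found in Chicago) and a hypothetical 30 are reported back by police as wrong. The function name `accuracy_range` is also just an illustration.

```python
def accuracy_range(total: int, confirmed: int, reported_wrong: int):
    """Return (optimistic, verified_only) accuracy for a batch of alerts.

    optimistic: every alert nobody complained about counts as correct.
    verified_only: only alerts backed by physical evidence count as correct.
    """
    unknown = total - confirmed - reported_wrong
    optimistic = (confirmed + unknown) / total
    verified_only = confirmed / total
    return optimistic, verified_only


# Hypothetical figures, chosen only to illustrate the argument.
optimistic, verified_only = accuracy_range(
    total=1000, confirmed=90, reported_wrong=30
)
print(f"Counting unknowns as correct: {optimistic:.0%}")   # 97%
print(f"Counting only confirmed shots: {verified_only:.0%}")  # 9%
```

The same 1,000 alerts produce either figure, depending entirely on how the 880 unverified alerts are treated. The true accuracy could lie anywhere between the two.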

Chicago defence lawyer Brendan Max says the company's accuracy claims are "marketing nonsense".

"Customer feedback is used to decide whether people like Pepsi or Coke better," he says.

"It's not designed to determine whether a scientific method works."

Conor Healy, who analyses security systems for video-surveillance research group IPVM, is also deeply sceptical about the 97% accuracy figure.

"Putting the onus on the police to report every false positive means you expect them to report on stuff, when nothing's happened… which they're unlikely to do," Mr Healy says.

"It's fair to assume that if they [ShotSpotter] have solid testing data to back up their claims, they have every incentive to release that data."

Gun crime on the rise

Back in Fresno, I join police officer Nate Palomino on a night-time ride-along.

Fresno has some of California's worst gun crime, and just like many other cities in America it's been getting worse in the last two years.

Sure enough, a ShotSpotter alert comes through. However, when we reach the scene, no casings are found and there's no physical evidence of a gunshot.

Officer Palomino tells me the audio recording sounds like a gunshot - and it seems more than possible it was - but it's difficult to prove.

He says that scenario is typical.

Officer Nate Palomino looking for gun casings

ShotSpotter's accuracy should be beyond doubt.

It has been used in courts up and down the country as evidence for both defence and prosecution.

The worry is that if it isn't as accurate as is claimed, ShotSpotter is sending officers into situations wrongly expecting gunfire.

Alyxander Godwin, who has been campaigning to get rid of ShotSpotter in Chicago, summarises the concern.

"The police are expecting these situations to be hostile," she says.

"They expect there to be a gun, and because of where this is deployed, they're expecting a black or brown person to be holding a gun."

But ShotSpotter says there is no data to back this theory up.

"What you'd be describing is a situation where officers get to a scene and they're basically shooting unarmed people," Mr Clark says.

"It's just not in the data - it's speculation."

Yet he seems to also accept that the company's own accuracy methodology has its limitations.

"It might be a fair criticism to say, 'Hey, look, you're not getting all the feedback that you might possibly get,'" Mr Clark says.

"That might be a fair criticism."

Mr Max, the Chicago lawyer, says ShotSpotter reports should not be allowed as evidence in court until the company can better back up its claims.

Chicago defence lawyer Brendan Max

"In the last four or five months, I'm aware of dozens of Chicagoans who have been arrested based on ShotSpotter evidence," he says.

"I'm sure that has played out in cities across the country."

He also says the company should open its systems up to better review and analysis.

For example, who is independently reviewing the quality of the analysts? And how often does the algorithm disagree with the human analyst?

Certainly, from my time at the ShotSpotter incident-review centre, it's common for analysts to disagree with the computer classification.

"It's just filtering out what we see," Ms Ammon says.

"But I honestly don't even look at it [the classification], I'm so busy looking at the sensor patterns."

It's an interesting admission. Sometimes, the technology is viewed as all seeing, all knowing - the computer masterfully detecting a gunshot.

In practice, the analysts have a far greater role than I expected.

Lawyers such as Brendan Max want to use the courts to establish more about how the technology works.

Saving lives

ShotSpotter has had a lot of criticism over the past year - not all of it fair.

And much of the coverage casually skips over the fact that police forces often give glowing reviews of the technology's effectiveness.

The company is keen to highlight cases where ShotSpotter has alerted police to gunshot victims, for example, saving lives.

In several cities across America, activists are trying to persuade cities to pull ShotSpotter contracts.

But in other places, ShotSpotter is expanding.

In Fresno, police chief Paco Balderrama is looking to increase its coverage, at a cost of $1m (£0.7m) a year.

"What if ShotSpotter only saves one life in a given year? Is it worth a million dollars? I would argue it is," he says.

The debate around ShotSpotter is hugely complex - and has important potential ramifications for community policing in America.

It's unlikely to go away until the tech's accuracy is independently verified and the data peer reviewed.

Watch Our World this weekend on the BBC News Channel and BBC World News to learn more on the technology behind the story. You can follow James Clayton on Twitter @jamesclayton5.

- Published 7 May 2021