AI: War crimes evidence erased by social media platforms

Ihor Zakharenko's videos from Ukraine were swiftly removed

Evidence of potential human rights abuses may be lost after being deleted by tech companies, the BBC has found.

Platforms remove graphic videos, often using artificial intelligence - but footage that may help prosecutions can be taken down without being archived.

Meta and YouTube say they aim to balance their duties to bear witness and protect users from harmful content.

But Alan Rusbridger, who sits on Meta's Oversight Board, says the industry has been "overcautious" in its moderation.

The platforms say they do have exemptions for graphic material when it is in the public interest - but when the BBC attempted to upload footage documenting attacks on civilians in Ukraine, it was swiftly deleted.

Artificial intelligence (AI) can remove harmful and illegal content at scale. When it comes to moderating violent images from wars, however, machines lack the nuance to identify human rights violations.

Ihor Zakharenko, a former travel journalist, encountered this in Ukraine. Since the Russian invasion he has been documenting attacks on civilians.

The BBC met him in a suburb of Kyiv where one year ago men, women and children had been shot dead by Russian troops while trying to flee occupation.

He filmed the bodies - at least 17 of them - and burnt-out cars.

He wanted to post the videos online so the world would see what happened and to counter the Kremlin's narrative. But when he uploaded them to Facebook and Instagram they were swiftly taken down.

"Russians themselves were saying those were fakes, [that] they didn't touch civilians, they fought only with the Ukrainian army," Ihor said.

We uploaded Ihor's footage on to Instagram and YouTube using dummy accounts.

Instagram took down three of the four videos within a minute.

At first, YouTube applied age restrictions to the same three, but 10 minutes later removed them all.

Videos documenting Russian attacks on civilians were taken down within minutes

We tried again - but they failed to upload altogether. An appeal to restore the videos on the basis that they included evidence of war crimes was rejected.

Key figures within the industry say there is an urgent need for social media companies to prevent this kind of information from vanishing.

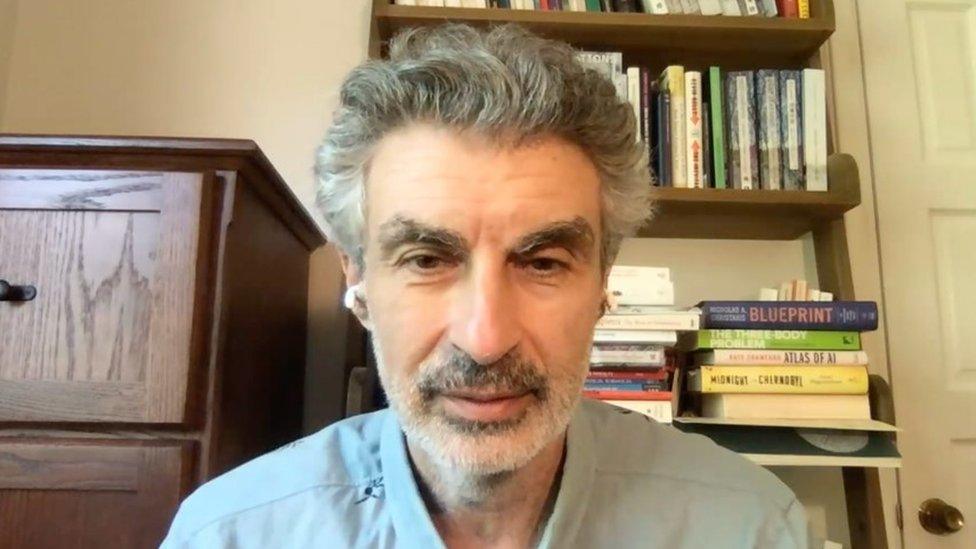

"You can see why they have developed and train their machines to, the moment they see something that looks difficult or traumatic, to take it down," Mr Rusbridger told the BBC. The Meta Oversight Board that he sits on was set up by Mark Zuckerberg and is known as a kind of independent "supreme court" for the company, which owns Facebook and Instagram.

"I think the next question for them is how do we develop the machinery, whether that's human or AI, to then make more reasonable decisions," Mr Rusbridger, a former editor-in-chief of the Guardian, adds.

No-one would deny tech firms' right to police content, says US Ambassador for Global Criminal Justice Beth Van Schaack: "I think where the concern happens is when that information suddenly disappears."

Atrocities from war are being documented on social media. This material can be used as evidence to help prosecute war crimes. But the BBC has spoken to people affected by violent conflict who have seen the major social media companies take down this content.

YouTube and Meta say that under their exemptions for graphic war footage in the public interest, content that would normally be removed can be kept online with viewing restricted to adults. But our experiment with Ihor's videos suggests otherwise.

Meta says it responds "to valid legal requests from law enforcement agencies around the world" and "we continue to explore additional avenues to support international accountability processes… consistent with our legal and privacy obligations".

YouTube says that while it has exemptions for graphic content in the public interest, the platform is not an archive. It says, "Human rights organisations; activists, human rights defenders, researchers, citizen journalists and others documenting human rights abuses (or other potential crimes) should observe best practices for securing and preserving their content."

The BBC also spoke to Imad, who owned a pharmacy in Aleppo, Syria, until a Syrian government barrel bomb landed nearby in 2013.

He recalls how the blast filled the room with dust and smoke. Hearing cries for help, he went to the market outside and saw hands, legs and dead bodies covered in blood.

Local TV crews captured these scenes. The footage was posted on YouTube and Facebook but has subsequently been taken down.

In the mayhem of the conflict, Syrian journalists told the BBC their own recordings of the original footage were also destroyed in bombing raids.

Years later, when Imad was applying for asylum in the EU, he was asked to provide documents that proved he was at the scene.

"I was sure that my pharmacy was captured on camera. But when I went online, it was taking me to a deleted video."

In response to this sort of incident, organisations like Mnemonic, a Berlin-based human rights organisation, have stepped in to archive footage before it disappears.

Mnemonic developed a tool to automatically download and save evidence of human rights violations - first in Syria and now in Yemen, Sudan and Ukraine.

They have saved more than 700,000 images from war zones before they were removed from social media, including three videos showing the attack near Imad's pharmacy.

Each image might hold a key clue to uncover what really transpired on the battlefield - the location, the date or the perpetrator.

But organisations like Mnemonic cannot cover every area of conflict around the world.

Proving that war crimes have been committed is incredibly hard - so getting as many sources as possible is vital.

"Verification is like solving a puzzle - you put together seemingly unrelated pieces of information to build a bigger picture of what happened," says BBC Verify's Olga Robinson.

The task of archiving open-source material - available to pretty much anyone on social media - often falls to people with a mission to help their relatives caught up in violent conflict.

Rahwa says it is her "duty" to archive open-source material from the conflict in the Tigray region of Ethiopia

Rahwa lives in the United States and has family in the Tigray region of Ethiopia, which has been wracked with violence in recent years, and where the authorities in Ethiopia tightly control the flow of information.

However, social media means there is a visual record of a conflict that might otherwise remain hidden from the outside world.

"It was our duty," says Rahwa. "I spent hours doing research, and so when you're seeing this content trickle in you're trying to verify using all the open-source intelligence tools you can get your hands on, but you don't know if your family is OK."

Human rights campaigners say there is an urgent need for a formal system to gather and safely store deleted content. This would include preserving metadata to help verify the content and prove it hasn't been tampered with.

Ms Van Schaack, the US Ambassador for Global Criminal Justice, says: "We need to create a mechanism whereby that information can be preserved for potential future accountability exercises. Social media platforms should be willing to make arrangements with accountability mechanisms around the world."

Additional reporting by Hannah Gelbart and Ziad Al-Qattan
