Instagram boss to meet health secretary over self-harm content


After Molly Russell took her own life, her family discovered distressing material about suicide on her Instagram account

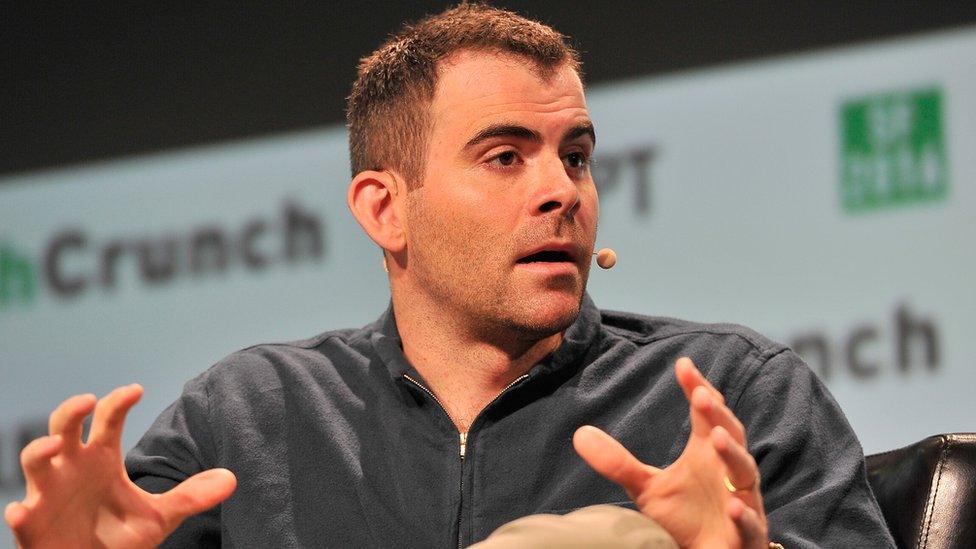

The boss of Instagram will meet the health secretary this week over the platform's handling of content promoting self-harm and suicide.

It comes after links were made between the suicide of teenager Molly Russell and her exposure to harmful material.

Instagram's boss, Adam Mosseri, said the platform would begin adding "sensitivity screens", which hide images until users actively choose to look at them.

But he admitted the platform was "not yet where we need to be" on the issue.

The sensitivity screens will mean certain images, for example of cutting, will appear blurred and carry a warning to users that clicking on them will open up sensitive content which could be offensive or disturbing.

'Deeply moved'

Molly, 14, took her own life in 2017. When her family looked into her Instagram account they found distressing material about depression and suicide.

Her father told the BBC he believed the Facebook-owned platform had "helped kill my daughter".

Mr Mosseri will meet Matt Hancock on Thursday. The health secretary said recently that social media firms could be banned if they failed to remove harmful content.

Writing in the Daily Telegraph, Mr Mosseri said Molly's case had left him "deeply moved" and he accepted Instagram had work to do.

"We rely heavily on our community to report this content, and remove it as soon as it's found," he wrote.

"The bottom line is we do not yet find enough of these images before they're seen by other people."

Instagram boss Adam Mosseri will answer questions over the company's practices

He said the company began a comprehensive review last week and was investing in technology "to better identify sensitive images" as part of wider plans to make posts on the subject harder to find.

"Starting this week we will be applying sensitivity screens to all content we review that contains cutting, as we still allow people to share that they are struggling even if that content no longer shows up in search, hashtags or account recommendations."

Instagram has previously said it does not automatically remove distressing content because it has been advised by experts that allowing users to share stories and connect with others could be helpful for their recovery.


Mr Hancock's meeting will take place on the same day that the chief medical officer, Dame Sally Davies, releases a report looking into the links between mental health issues and social media.

Meanwhile, a government white paper, expected this winter, will set out social media companies' responsibilities to their users.

A statement from the Department for Digital, Culture, Media and Sport said: "We have heard calls for an internet regulator and to place a statutory 'duty of care' on platforms, and are seriously considering all options."

Separate reports by the Children's Commissioner for England and the House of Commons Science and Technology Committee have called on social media firms to take more responsibility for the content on their platforms.

If you’ve been affected by self-harm or emotional distress, help and support are available via the BBC Action Line.
