Election polling errors blamed on 'unrepresentative' samples


The failure of pollsters to forecast the outcome of the general election was largely due to "unrepresentative" poll samples, an inquiry has found.

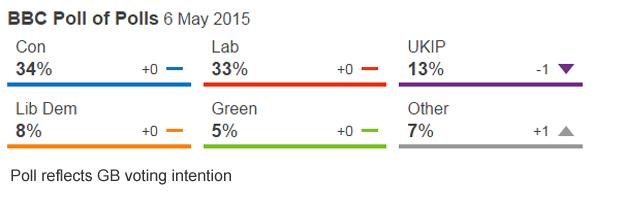

The polling industry came under fire for predicting a virtual dead heat when the Conservatives ultimately went on to outpoll Labour by 36.9% to 30.4%.

A panel of experts has concluded this was due to Tory voters being under-represented in phone and online polls.

But it said it was impossible to say whether "late swing" was also a factor.

The majority of polls taken during last year's five-week election campaign suggested that David Cameron's Conservatives and Ed Miliband's Labour were neck-and-neck.

This led to speculation that Labour could be the largest party in a hung parliament and could potentially have to rely on SNP support to govern.

But, as it turned out, the Conservatives secured an overall majority in May for the first time since 1992, winning 99 more seats than Labour, their margin of victory taking nearly all commentators by surprise.

'Statistical consensus'

The result prompted the polling industry to launch an independent inquiry into the accuracy of their research, the reasons for any inaccuracies and how polls were analysed and reported.

An interim report by the panel of academics and statisticians found that the way in which people were recruited to take part - asking about their likely voting intentions - had resulted in "systematic over-representation of Labour voters and under-representation of Conservative voters".

These oversights, it found, had resulted in a "statistical consensus".

How opinion polls work

The exit poll conducted on election day itself came much closer to the ultimate result than any of those conducted in the run-up.

Most general election opinion polls are either carried out over the phone or on the internet. They are not entirely random - the companies attempt to get a representative sample of the population, in age and gender, and the data is adjusted afterwards to try and iron out any bias, taking into account previous voting behaviour and other factors.

But they are finding it increasingly difficult to reach a broad enough range of people. It is not a question of size - bigger sample sizes are not necessarily more accurate.
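The point about sample size can be illustrated with a rough sketch. It assumes a simple random sample drawn from the people a pollster can actually reach, and the two vote shares are hypothetical figures chosen for illustration, not numbers from the inquiry. The margin of error shrinks as the sample grows, but the gap between the reachable group and the full electorate does not.

```python
import math

def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a share p in a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)

# Hypothetical figures, for illustration only (not from the inquiry).
true_share = 0.37          # a party's share among all voters
share_in_reachable = 0.34  # the same party's share among people the pollster can reach

bias = share_in_reachable - true_share  # fixed, whatever the sample size

for n in (1_000, 10_000, 100_000):
    moe = margin_of_error(share_in_reachable, n)
    print(f"n={n:>7}: estimate about {share_in_reachable:.1%} "
          f"+/- {moe:.1%}, bias stays {bias:+.1%}")
```

Under these assumptions a poll of 100,000 people is more precise than a poll of 1,000, but it is precise about the wrong number: the three-point shortfall is untouched by the extra interviews.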

YouGov, which pays a panel of thousands of online volunteers to complete surveys, admitted they did not have access to enough people in their seventies and older, who were more likely to vote Conservative. They have vowed to change their methods.

Telephone polls have good coverage of the population, but they suffer from low response rates - people refusing to take part in their surveys, which can lead to bias.

This, it said, was borne out by polls taken after the general election by the British Election Study and the British Social Attitudes Survey, which produced a much more accurate assessment of the Conservatives' lead over Labour.

NatCen, which conducted the British Social Attitudes Survey, has described making "repeated efforts" to contact those it had selected to interview - and among those most easily reached, Labour had a six-point lead.

However, among the harder-to-contact group, who took between three and six calls to track down, the Conservatives were 11 points ahead.

'Herding'

Former Liberal Democrat leader Lord Ashdown, who famously dismissed the election night exit poll with a promise to eat his hat if it was true, said the polls had a "considerable" impact on the way people voted.

He told BBC Radio 4's The World at One: "The effect of the polls was to hugely increase the power of the Conservative message and hugely decrease the power of the Liberal Democrat message, which was you need us to make sure they don't do bad things."

The peer claimed the "mood of the nation" was for another coalition and voters were "surprised" when the Conservatives won outright.

"I think, therefore, there is an argument to be made that it actually materially altered the outcome of the election."

Evidence of a last-minute swing to the Conservatives was "inconsistent", the polling experts said, and if it did happen its effect was likely to have been modest.

They also downplayed other potential explanations for why the polls got it wrong, such as misreporting of voter turnout, problems with question wording or how overseas, postal or unregistered voters were treated in the polls.

However, the panel said it could not rule out the possibility of "herding" - where firms configured their polls in a way that caused them to deviate less from one another than could have been expected given the sample sizes. But it stressed that this did not imply malpractice on the part of the firms concerned.
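As a rough illustration of what "deviating less than expected" means, the sketch below compares the spread of a set of published leads with the spread that sampling error alone would produce. It is not the panel's method. It assumes simple random samples of 1,000 people, and both the vote shares and the list of leads are made up for illustration. Real polls use quotas and weighting, so the formula is only a loose benchmark.

```python
import math
import statistics

def lead_sd(p_a, p_b, n):
    """Standard deviation of the lead (p_a - p_b) in a simple random sample of size n."""
    # The two shares come from the same sample, so they are negatively
    # correlated; that is where the 2 * p_a * p_b term comes from.
    variance = (p_a * (1 - p_a) + p_b * (1 - p_b) + 2 * p_a * p_b) / n
    return math.sqrt(variance)

# Hypothetical inputs, for illustration only.
expected_sd = lead_sd(0.34, 0.34, 1000) * 100   # in percentage points
published_leads = [0, 1, -1, 0, 1, 0, -1, 0]    # made-up leads, in points
observed_sd = statistics.pstdev(published_leads)

print(f"spread expected from sampling alone: {expected_sd:.1f} points")
print(f"spread across published leads:       {observed_sd:.1f} points")
```

With these made-up numbers the published leads vary by about 0.7 points, against roughly 2.6 points expected from chance alone. A gap of that kind is what the term "herding" describes, although on its own it says nothing about why the polls clustered.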

Prof Patrick Sturgis, director of the National Centre for Research Methods at the University of Southampton and chair of the panel, told the BBC: "They don't collect samples in the way the Office for National Statistics does by taking random samples and keeping knocking on doors until they have got enough people.

"What they do is get anyone they can and try and match them to the population... That approach is perfectly fine in many cases but sometimes it goes wrong."

Prof Sturgis said that sort of quota sampling was cheaper and quicker than the random sampling done by the likes of the ONS, but even if more money was spent - and all of the inquiry's recommendations were implemented - polls would still never get it right every time.

Analysis by the BBC's political editor Laura Kuenssberg

I remember the audible gasp in the BBC's election studio when David Dimbleby read out the exit poll results.

But for all that the consequences of that startling result were many and various, the reasons appear remarkably simple.

Pollsters didn't ask enough of the right people how they planned to vote. Proportionately, they asked too many likely Labour voters, and not enough likely Conservatives.

Politics is not a precise science and predicting how people will vote will still be a worthwhile endeavour. Political parties, journalists, and the public of course would be foolish to ignore the polls. But the memories and embarrassment for the polling industry of 2015 will take time to fade.


Joe Twyman, from pollster YouGov, told the BBC it was becoming increasingly difficult to recruit people to take part in surveys - despite, in YouGov's case, paying them to do so - but all efforts would be made to recruit subjects in "a more targeted manner".

"So more young people who are disengaged with politics, for example, and more older people. We do have them on the panel, but we need to work harder to make sure they're represented sufficiently because it's clear they weren't at the election," he said.

On Tuesday, the Lords approved a bill which would create a new watchdog to regulate future polling by specifying sampling methods, producing guidance on the wording of questions and deciding whether there should be a moratorium on polls in the run-up to elections.

But the private member's bill, tabled by Labour's Lord Foulkes, has yet to be introduced to the Commons and is unlikely to become law due to lack of parliamentary time.

- Published 19 January 2016
