Meet the 'ethical' robots that behave like penguins


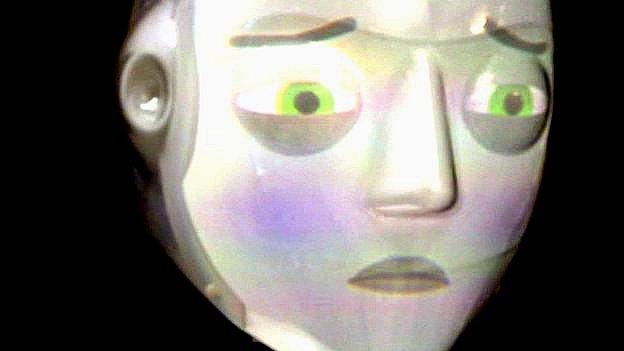

Chris Headleand asks whether it is possible to create robots that seem to be ethical

From 2001: A Space Odyssey's HAL to the Terminator's T-800, science fiction is full of cautionary tales about the dangers robots pose to humanity.

In April, the United Nations held a summit in Geneva to examine the future of so-called lethal autonomous weapons systems, with some groups calling for an international ban on killer robots.

But is it possible to create robots that are, or at least seem, ethical?

That is the question being posed by a PhD student at Bangor University in Gwynedd, and with surprising results.

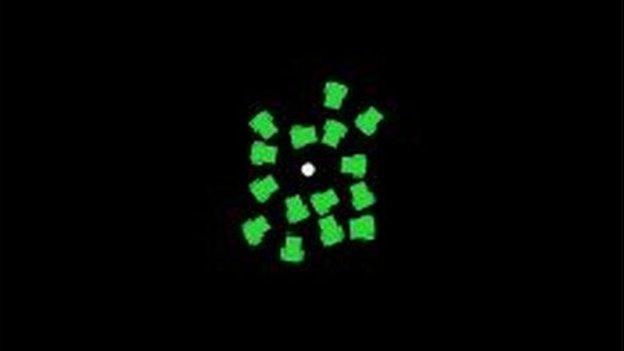

Chris Headleand's automated artificial beings crowd around a power resource in a simulation

Christopher Headleand, 30, is researching how electronic "agents" with the simplest programming can be made to behave in ways that may appear moral.

"The best we can do at the moment is to attempt to simulate ethical behaviour. We are not saying these robots are ethical but in some situations they can behave in a way which appears to an observer as ethical," he said.

"If it looks like a duck, and quacks like a duck, for the purposes of a simulation, I'm willing to accept it's a duck."

Virtual simulations

He tests the agents, which are not physically built but modelled as motor-driven vessels with simple sensors, in virtual simulations.

These test environments let him see how the artificial beings interact when they are programmed to carry out tasks such as reaching a power source (effectively food) before they run out of energy and die.

In the tests, some agents have been programmed to be hedonistic and self-centred, others utilitarian and some even altruistic.

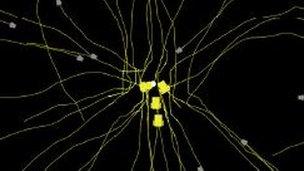

The 'altruistic vessels' are seen here vacating the path to a power resource for others, with the grey marks showing the spots where they have selflessly died

Of the altruistic agents, Mr Headleand said: "We saw some agents that were sacrificing themselves to save others.

"If you start trying to describe this using language from psychology rather than engineering, that's the point where it becomes quite interesting."

'Panicked and swerving'

Mr Headleand pointed to the way penguins sometimes huddle to share and conserve heat in the wild, adding: "We were getting behaviour that was very similar.

"We were observing emergent behaviour such as different social classes of agents.

"Agents who were closest to the resources were really calm.

"But what was interesting, [those on] the outer circle, on the outer edges of the resources, were panicked and swerving around and constantly trying to dive in."

He added: "You start to look at these agents as simulated life, you start to anthropomorphise them."

Mr Headleand - whose work is supported by Fujitsu - said the use of robots in human affairs was becoming far more common and he believes "ethical machines" could one day play a part in certain industries, including manufacturing and the care sector.

"We are now moving towards the fact that it's [in] life a lot more, in an everyday sense.

"But there is a safety implication there. How can humans work with these robots? How can we interact with them?

"Perhaps people would be a lot more comfortable working with robots if they displayed behaviour that appeared to be ethical."
