Five questions we should ask ourselves before AI answers them for us


Artificial intelligence is already here.

If you clicked on this article from Facebook, a form of AI decided to put it in your newsfeed.

Listened to recommended music on Spotify? AI played a part in that too.

There's 'narrow' AI like this, and then there's the AI of the future.

The tone of the debate can at times be tense, dominated by warnings about a robot apocalypse.

We spoke to Joanna Bryson, an expert in artificial intelligence at Bath University, about the questions and ethics surrounding AI.

Daniel Ek, CEO of Spotify

1) Is it a problem if AI can predict what we're going to do?

Joanna says we should think carefully about this kind of AI.

She tells Newsbeat: "The same things we like when Amazon recommends what we might like to buy, allows advertising to manipulate us. It allows people to control the world differently."

No, she's not talking about this Amazon

The algorithms social networks like Facebook use to "improve" our experience may also shape what news we consume. They may influence who we follow, altering our future experiences of the news.

It's not just about predictions though, says Joanna. It's about data.

2) Do we need data police?

These are units for storing digital data

It's hard to go a day without telling a company or organisation something about yourself. Even using a loyalty card allows a supermarket to build up a picture of what you like. This could be a problem, says Joanna.

"If your data is out there people have power over you, because they can tell more about you," she says.

"Companies like to hold all the information. As long as they have that information though, governments can demand it, or hackers can get into it."

Joanna says in terms of data "it's a wild west out there". We may be able to police it properly in the future though.

"Google and Apple want people to trust them so they are trying to develop the kind of algorithms that make it harder to pull this kind of stuff out," she says.

"It's a wild west in terms of data out there" says Joanna

Using the example of calling the police after finding out you've been burgled, she says similar laws could be created for data.

"We could say 'we don't want you to come in and use our data for anything other than what we've licensed you to use it for,'" she explains.

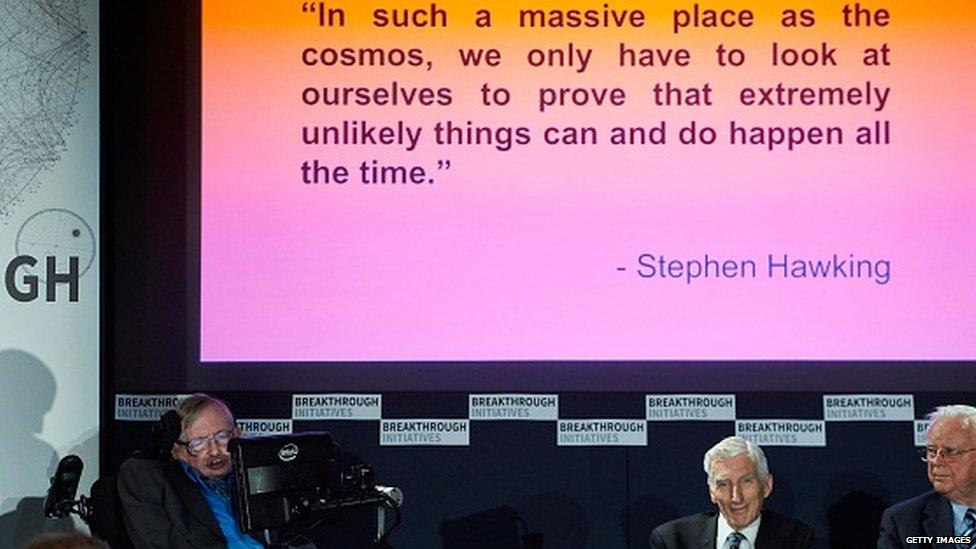

These are issues we face now according to Joanna. But what about the future? Only recently Stephen Hawking warned that artificial intelligence could end mankind.

Prof Stephen Hawking said efforts to create thinking machines posed a threat to our very existence (he's talking about the cosmos at this conference though).

3) Is there a danger AI is going to take over the world?

Saying that because something is intelligent it will try to rule the world is silly, says Joanna.

"There's a lot of really intelligent people who aren't trying to take over the world. There's a broken idea that anything that's intelligent will have the same kind of ambitions as we have," she says.

She offers the example of a cheetah.

"The idea that just because humans are the smartest animal, anything that is intelligent will become a person is broken. That's like thinking any animal that becomes fast will turn into a cheetah."

Joanna uses the example of a cheetah

People often become confused between artificial intelligence that works like human intelligence and AI that behaves like a human being. It's one of the reasons there's a debate about making robots similar to humans.

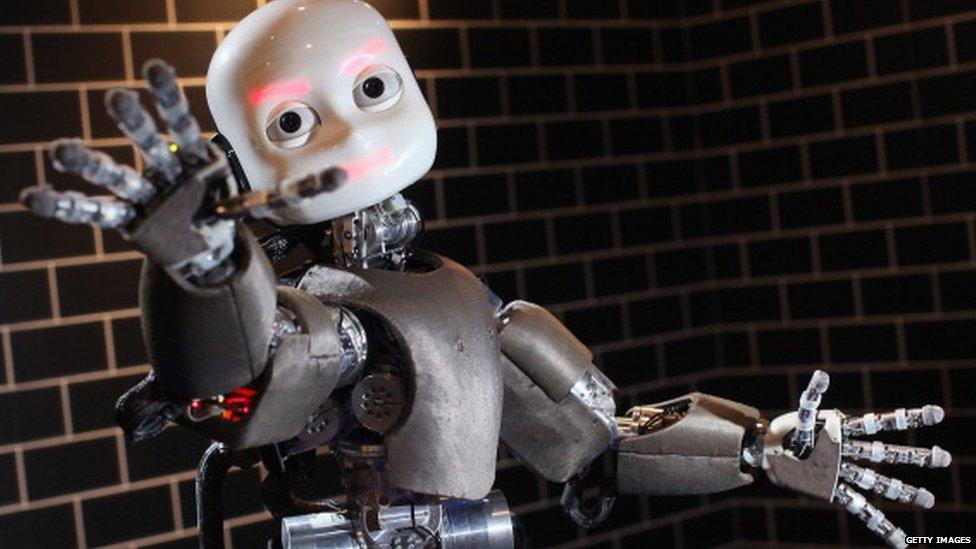

4) Should we be making AI that behaves like humans?

A humanoid robot. Japanese scientists unveiled this news-reading android, supposedly possessing a sense of humour to match her perfect language skills, in 2014

Joanna says no.

"What people seem to want is better friends or something and I think that's incoherent. Of course we have a craving for good friends but why do you want one that you can turn off?"

She says there are other problems with building a robot AI that behaves like a human.

"If we intentionally try and make something like people I think that is ethically wrong. Everything that we have come to think is ethical is based around protecting humans or things like humans."

Joanna says there are problems with building an AI that behaves like a human

She says this could lead to robot AIs needing rights.

"There's no reason to make a machine that is so much like humans that we need to protect it. We're morally obliged not to make AI that we are morally obliged to."

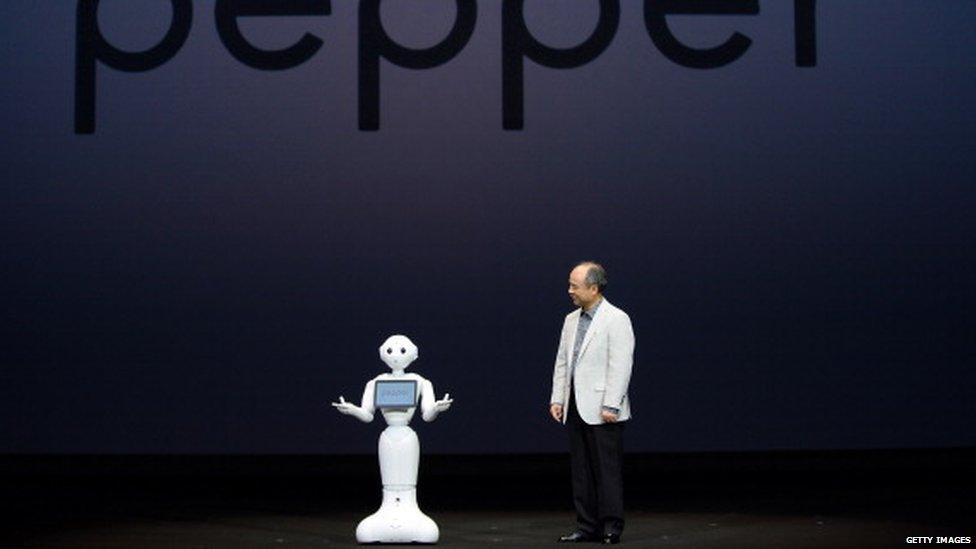

Masayoshi Son, president of Japan's mobile carrier SoftBank, introduces the humanoid robot 'Pepper'

Building a robot that looks like a human confuses people and it can affect how we treat each other, she adds.

"If you have a confusion between what the AI is and what the human is and you treat it badly then you are more likely to treat humans badly including yourself. It's pretty well accepted that that's a bad thing. You don't want to treat something that reminds you of a human in a bad way."

5) AI is and could be powerful. Should we have a campaign to stop the killer robots?

"I don't like the word killer robot. It's not the robots that are killing, it's the people that are killing with them. We build the machine, then we blame it for having done something when we made the decision to build the machine and someone made the decision to use the machine."

She says sophisticated weapons can affect warfare in different ways. If a nation is sending machines on missions instead of human soldiers, it makes less of an impact on the citizens of the country sending in the machines. This in turn can affect public attitudes towards warfare.

"There are a lot of issues around how we conduct war and whether what we are doing now is OK," she says.

Not everyone agrees with her though. You can find out some of the other arguments about killer robots here.
