Could an algorithm help prevent murders?

Algorithms are increasingly used to make everyday decisions about our lives. Could they help the police reduce crime, asks David Edmonds.

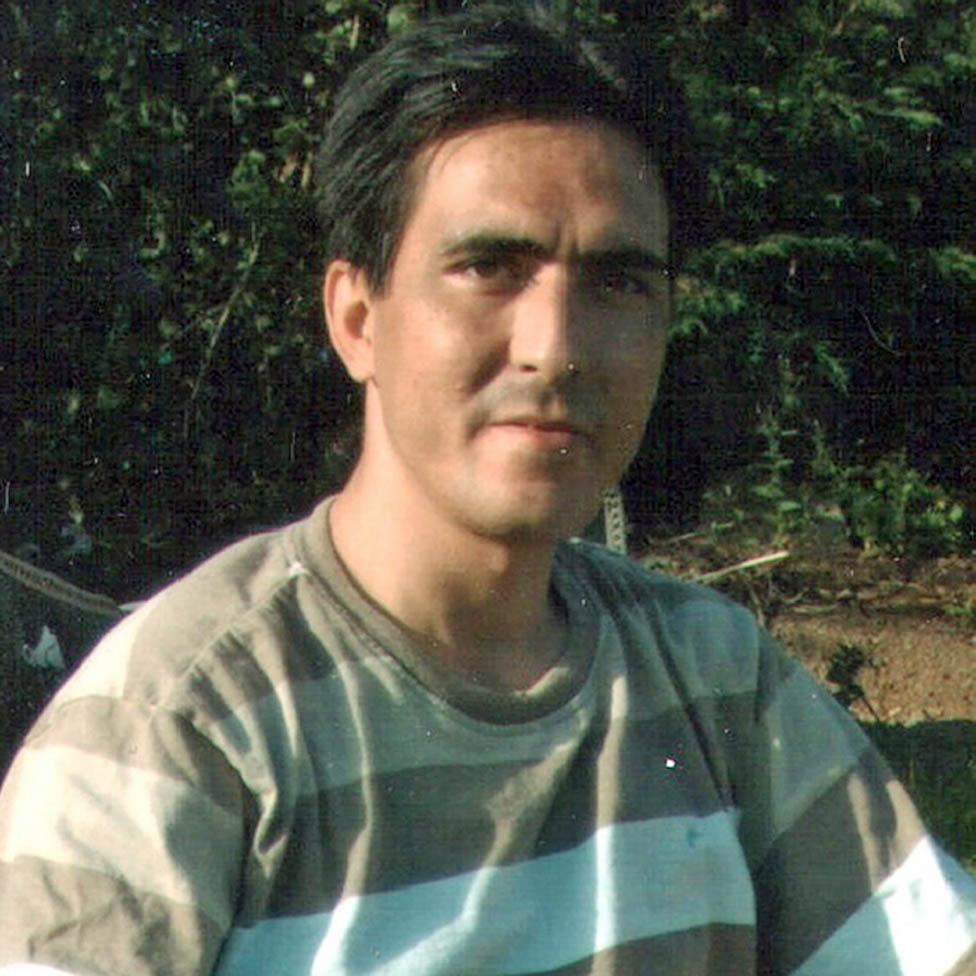

In July 2013, a 44-year-old man, Bijan Ebrahimi, was punched and kicked to death in south Bristol. His killer, a neighbour, then poured white spirit over his body and set it alight on grass 100 yards from his home. Iranian-born Ebrahimi, who'd arrived in Britain over a decade earlier as a refugee, had been falsely accused by his killer of being a paedophile.

On 73 occasions, over an eight-year period, Ebrahimi had reported to the police that he'd been the victim of racially motivated crimes.

His complaints went unheeded and a report into his murder concluded that both Bristol City Council and the police were guilty of institutional racism.

Bijan Ebrahimi

"That was really a turning point from a data perspective," says Jonathan Dowey, who heads a small team of data analysts at Avon and Somerset Police. The question the force began to ask, he says, was: "Could we be smarter with our data?"

A Freedom of Information trawl by the civil liberties group Liberty recently discovered that at least 14 police forces in the United Kingdom have used, or are planning to use, algorithms - computer mathematical formulae - to tackle crime. It seems probable that within a few years almost all police forces will be using such methods.

After Ebrahimi's murder, Avon and Somerset Police began to experiment with software to see whether it could help identify risks - risks from offenders, of course, but also risks to potential victims like Ebrahimi.

Find out more

Listen to David Edmonds' documentary Can computer profiles cut crime? on BBC Radio 4's Analysis at 20:30 on Monday 24 June

Humans are susceptible to all manner of biases. And unlike computers, they're not good at spotting patterns - whether, for example, there have been multiple calls from the same address. When Avon and Somerset retrospectively ran a predictive model to see if the tragedy could have been averted, Ebrahimi's address popped up as one of the top 10 raising concern.
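The kind of pattern-spotting described here, noticing repeated calls from one address, can be sketched in a few lines of Python. This is only an illustration and not the predictive model Avon and Somerset ran: the call log, the addresses and the function name `top_repeat_addresses` are all invented, and a real system would weigh many more factors than a simple count.

```python
from collections import Counter


def top_repeat_addresses(calls, n=10):
    """Return the n addresses with the most logged calls, most frequent first."""
    counts = Counter(address for address, _ in calls)
    return counts.most_common(n)


# Hypothetical call log of (address, reported issue) pairs; the data is invented.
calls = [
    ("12 Example Road", "harassment"),
    ("3 Sample Street", "noise"),
    ("12 Example Road", "criminal damage"),
    ("12 Example Road", "threats"),
    ("7 Placeholder Lane", "theft"),
    ("3 Sample Street", "noise"),
]

ranked = top_repeat_addresses(calls, n=2)
print(ranked)  # [('12 Example Road', 3), ('3 Sample Street', 2)]
```

A count like this flags nothing on its own; it simply puts the addresses that call most often at the top of a list for a human to review.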

Different police forces are trialling different algorithms. West Midlands uses software to spot patterns between crime and time of year, day of the week and time of the day that crimes are committed. West Yorkshire is working on a system to predict areas at high risk of crime.

Durham Police have been co-operating with Cambridge University in an initiative called Hart - the Harm Assessment Risk Tool. The aim is to design an algorithm to help predict whether a person arrested for a crime is likely to reoffend or whether they are safe to return to the community. The algorithm allows police to use alternatives to costly and often unsuccessful prosecutions.

As for Avon and Somerset, they're now using algorithms for all manner of purposes. For example, a big drain on police resources is the hunt for people who go missing - so the force is trying to predict who might do so in order that preventative measures can be taken.

A rather straightforward application of algorithms is the assessment of how many calls the force gets from members of the public at different times of the day. Finding this out has enabled it to reduce the percentage of people who hang up before the call is answered from around 20% down to 3% - a significant achievement.
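Counting calls by time of day is simple enough to show in miniature. The sketch below is an assumption about how such a tally might look, not the force's own software: the timestamps are invented and `calls_per_hour` is a hypothetical helper.

```python
from collections import Counter
from datetime import datetime


def calls_per_hour(timestamps):
    """Count calls in each hour of the day (0-23) from ISO 8601 timestamps."""
    counts = Counter(datetime.fromisoformat(ts).hour for ts in timestamps)
    return [counts.get(hour, 0) for hour in range(24)]


# Hypothetical call times; the data is invented for illustration.
timestamps = [
    "2019-06-24T08:15:00",
    "2019-06-24T08:40:00",
    "2019-06-24T17:05:00",
    "2019-06-24T17:30:00",
    "2019-06-24T17:55:00",
    "2019-06-24T23:10:00",
]

hourly = calls_per_hour(timestamps)
busiest_hour = max(range(24), key=lambda hour: hourly[hour])
print(busiest_hour, hourly[busiest_hour])  # 17 3
```

Knowing which hours are busiest is what would let a force match the number of call handlers to demand.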

Police forces like Avon and Somerset are receiving tens of thousands of new bits of data every day. "We're in a blizzard of data," says Jonathan Dowey. "It's no longer viable to have an army of humans trying to determine risk and vulnerabilities on their own."

The public may be largely unaware of how algorithms are penetrating every aspect of the criminal justice system - including, for example, a role in sentencing and in determining whether prisoners get parole - but civil liberties groups are becoming increasingly alarmed. Hannah Couchman of Liberty says that "when it comes to predictive policing tools, we say that their use needs to cease".

One worry is about human oversight. Avon and Somerset Police emphasise that in the end algorithms are only advisory, and that humans will always retain the final decision.

But while this is the current practice, it seems quite possible that as police forces become more accustomed to the use of algorithms their dependence upon them will grow. It's not an exact analogy, but drivers who routinely use satellite navigation systems tend to end up believing that the sat-nav knows best.

Another cause of unease about algorithms concerns transparency. Algorithms are informing vital decisions taken about people's lives. But if the computer suggests that someone is at high risk of re-offending, justice surely requires that the process by which this calculation is reached be not only accessible to humans but also open to challenge.

An even thornier issue is algorithmic bias. Algorithms are based on past data - data which has been gathered by possibly biased humans. As a result, the fear is that they might actually come to entrench bias.

There are many ways in which this might occur. Suppose, for example, that one of the risk-factors weighed up in an algorithm is "gang membership". It's possible that police might interpret behaviour by white and black youths differently - and so be more likely to identify young black men as members of gangs. This discriminatory practice could then be embedded in the software.

Which variables should go into an algorithm is hugely contentious territory. Most experts on police profiling want to exclude race. But what about sex, or age? What makes one variable inherently more biased than another? What about postcode? Durham initially included postcode in their Hart tool, but then removed it following opposition. Why should people be assessed based upon where they lived, the objection ran - would this not discriminate against people in less desirable neighbourhoods?

American-born Lawrence Sherman, a criminology professor at the University of Cambridge, has been obsessed with policing ever since he watched the brutal police attacks on civil rights activists in the US in the 1960s. Now a leading figure in the push for empirically based policing, he concedes that by excluding certain factors such as race or postcode, the accuracy of the algorithm might be compromised.

But that, he says, is less critical than keeping the public on board: "You are making [the algorithm] less accurate to a small degree, and more legitimate to a much larger degree."

What's remarkable is that there is no legislation specifically governing the use of police algorithms.

An advisory tool, called Algo-Care, has been developed by various academics and endorsed by the National Police Chiefs' Council. It lays out various voluntary principles governing, for example, transparency, bias and human oversight, and it recommends any algorithm be re-tested over time to ensure accuracy. Six police forces are already using it. But one of the authors of Algo-Care, Jamie Grace, says it can only serve as an interim solution: "What we need is government to take the lead," he says.

If we get police algorithms wrong, there's potential for scandal and injustice. If we can get them right, there's the promise of big pay-offs. Dr Peter Neyroud, a former Chief Constable now based at Cambridge's Institute of Criminology, says the Institute's analysis "suggests that only 2.2% of offenders can be expected to go on to commit a high-harm offence in the future". If we could accurately pinpoint this tiny percentage, we could drastically cut the prison population.

The causes of crime are complex, but since Avon and Somerset Police began to use algorithms, there's been an 11% fall in reports of missing people and a 10% fall in burglary.

As for the problem of algorithmic bias, there's a more optimistic scenario. In theory - and hopefully also in practice - we could reduce the impact of human prejudice. Take the case of Bijan Ebrahimi. The police failed to recognise the danger - an algorithm could have highlighted it. Algorithms, in other words, could save lives.
