CES 2012: New interface controls challenge status quo

Puppet playing can only happen thanks to highly sophisticated bouncing light technology

On screen two animated "puppets" are wriggling around.

As the big blue hippo and thin red worm dance from side to side, on the bottom right of the screen their movements match shapes cast by images of two fluorescent green hands.

These in turn mirror actions being taken by SoftKinetic's demonstrator, who is gesticulating wildly just 15cm in front of the firm's new DepthSense camera.

Gesture-controlled interfaces are nothing new. Microsoft's Kinect sensor became the world's fastest-selling consumer electronics device when it was launched for the Xbox console in late 2010 - and this year's Consumer Electronics Show is jam-packed with companies putting it to alternative uses.

However, what makes SoftKinetic's device stand out is the fact that the user can be close to the sensor - making it suitable as a control mechanism for laptops and tablets.

"The technology is very different from what Microsoft is using," the Belgian firm's chief executive Michael Tombroff tells the BBC.

"We use something called Time-of-Flight which is our own patented technology which is more suited for close interactions."

3D displays

The system works by projecting light into space, which then bounces back to the camera. The sensor calculates the light's "time of flight" to work out how far away the various parts of the user's body are.
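The basic relation behind any time-of-flight measurement can be sketched in a few lines: light travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. This is only an illustration of that arithmetic. The function names are hypothetical, and nothing here describes how SoftKinetic's patented sensor actually measures the timing.

```python
# Illustrative sketch only: function names are hypothetical and this is
# not SoftKinetic's method, just the underlying distance arithmetic.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a given round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light covers the distance twice (out and back), hence the halving.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for an object at distance_m."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A hand held 15cm from the camera, as in the demonstration.
    t = round_trip_for_distance(0.15)
    print(f"round trip: {t * 1e9:.3f} ns")
    print(f"recovered distance: {distance_from_round_trip(t):.3f} m")
```

For a hand 15cm from the camera the round trip takes roughly one nanosecond, which gives a sense of how fine the timing has to be at close range.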

"We can adjust the illumination according to the distance," Mr Tombroff explains.

"The further you want to see objects the more illumination you need because they are far away, the closer they are the lower the illumination. Time of flight is very good for both ends of the spectrum."

SoftKinetic say their device is "very different" to Microsoft's Kinect

The firm's ultimate ambition is to shrink its camera so that it is small enough to fit into ultrabooks and smartphones, and license out the technology to manufacturers.

Beyond hand puppet animations and gaming, it suggests more serious applications such as computer-aided design and the control of 3D displays.

"You will see objects coming out of the screen... and you will be able to grab and play with them," said Mr Tombroff.

At the start of the Las Vegas trade show, the organiser - the US's Consumer Electronics Association - predicted that this would be "the year of the interface".

It said the companies would aim to make operating devices a "seamless, natural experience" in which the technology involved would "move into the background."

The proliferation of gesture-controlled devices on show has borne that out. Examples include booths in which shoppers can spin around avatars of themselves wearing virtual clothes and robots whose movements are controlled with a wave of the hand.

New uses of voice recognition have been the other major development, albeit with mixed results.

Windows Phone's senior product manager, Derek Snyder, had a nasty moment when his demo of the operating system's speech recognition facility briefly went awry in front of an audience of thousands at the firm's keynote presentation.

Show-and-tells of Samsung's new voice controlled television set also proved glitchy at times, with misunderstood commands and the system refusing to acknowledge instructions if unapproved words were added to requests.

These issues are likely to be worked out as natural speech recognition software advances.

However, some firms believe it is wise to resist the technology at present.

Keeping it simple

Daimler's Mercedes-Benz USA division has opted for a flywheel control for its new mbrace 2 dashboard system, unlike its rival Ford, whose Sync system focuses on voice.

Mercedes' "command controller" can be spun around and moved up and down to act as a toggle or cursor for its display unit, which provides access to internet services including Facebook, Google, Yelp and Associated Press news.

The new Mercedes SL-class will be the first model to be equipped with mbrace 2 in April - with a wider roll-out happening next year.

While the firm has used similar control mechanisms in the past, its key innovation is the interface it controls, which strips apps down to their basics.

The company put together its own app development team in Silicon Valley's Palo Alto last year. It coded and designed the apps, while keeping in close contact with original developers such as Facebook to ensure they approved of its efforts to make the apps safer to use while driving.

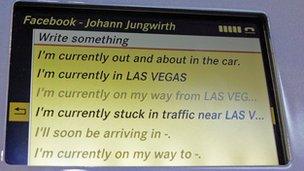

Mbrace 2 users can add Facebook status updates based on pre-written templates

"If you want to post to your wall you can do that using a series of canned messages already plugged in there," explains Robert Policano, the firm's product manager of telematics services.

"The advantage of that is that you don't have to take your eyes off the road for more than a few seconds to access that.

"It will recognise where you are so you can use it to post your estimated time of arrival, where your ultimate destination is, or a [pre-programmed] cheeky message such as 'I'm stuck in darned traffic again.'"

More elaborate messages can be written from scratch, but the system will refuse to accept them unless it detects the car has been parked, to protect the motorist and other road users.

The firm's Google app also offers panoramic images provided by the search firm and other users to help motorists work out where to go. Pre-programmed menu commands can also hunt out nearby petrol stations or points of interest via the internet.

"Keeping [drivers'] eyes on the road as much as possible is a top priority," Mr Policano says.

"But the world has changed - people want to bring their digital lives into their cars, they have this craving for information whether it's the weather, the latest stocks, current traffic conditions or who is talking about them on their [Facebook] walls. So with that realisation we said how can we do this in the smartest way possible."

Eye controls

An even smarter alternative may be just around the corner.

Swedish firm Tobii is attending CES for the first time to promote its Eye Tracking technology.

Its sensors measure the position and movement of the user's eyes hundreds of times every second, allowing desktop PCs and laptops equipped with them to be controlled by gaze.

Using the equipment feels like the computer has learned how to read your mind.

Pages of text start to scroll upwards as you reach the bottom part of the screen.

A game of Asteroids can be controlled by flicking your eyes towards each space rock, activating laser blasts to prevent the objects from reaching Earth.

And objects can be selected from a page full of images by staring at the relevant photograph until it is replicated at the top of the screen - glancing at this copy then expands the picture to fill the whole screen.
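Selecting an item by staring at it is often described as dwell-based selection. A minimal sketch of the idea follows, assuming gaze samples arrive as (timestamp, target) pairs and that a fixed dwell threshold triggers the selection. The function name, the data layout and the 0.8-second threshold are all assumptions for illustration and are not taken from Tobii's software.

```python
from typing import Iterable, Optional, Tuple

# Hypothetical sample format: (time in seconds, id of the item being
# looked at, or None when the gaze is not on any item).
GazeSample = Tuple[float, Optional[str]]


def dwell_select(samples: Iterable[GazeSample],
                 dwell_seconds: float = 0.8) -> Optional[str]:
    """Return the first item stared at continuously for dwell_seconds.

    The threshold value is an assumption; a real system would tune it.
    """
    current: Optional[str] = None
    started_at = 0.0
    for timestamp, target in samples:
        if target != current:
            # Gaze moved to a different item (or off all items): restart.
            current = target
            started_at = timestamp
        elif current is not None and timestamp - started_at >= dwell_seconds:
            return current
    return None


if __name__ == "__main__":
    stream = [(0.0, "photo_a"), (0.2, "photo_b"),
              (0.4, "photo_b"), (1.1, "photo_b")]
    print(dwell_select(stream))
```

Brief glances never reach the threshold, so only a sustained stare counts as a selection. A real interface would tune the threshold to trade speed against accidental triggers.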

The timeless classic arcade game Asteroids can now be powered by flicking your eyes

"We know exactly where you are looking all the time," says the firm's founder and chief technology officer John Elvesjo.

"So you can have interfaces that adapt to your gazepoint. The simplest example is a screen that turns on when you are looking at it and is off when you are not looking at it.

"Or let's say in a car with a heads up display I can minimise the readouts in size and light intensity until you look at them - and then they grow bigger and brighter."

Leaving the 'stone age'

Tobii's technology is already used to help disabled people control computers, but its packages currently retail for around $6,000 (£3,910).

It says costs and sensor sizes must be reduced if the technology is to enter the consumer market, but adds that virtually every games console and PC maker has bought and trialled its technology. China's Lenovo has already produced a concept laptop with the technology built in and Tobii predicts Eyetrack could go mainstream in as little as two years.

It is attending CES to encourage software developers to start work on related apps.

"You have PlayStation's Motion and Xbox's Kinect when you are at a distance, you have touch when you are really up close, but on that middle distance where we are working all the time with our computers, you have nothing," says Mr Elvesjo.

"And you have like a stone age keyboard with a kind of stone age mouse - so it's up for innovation."

Whether Eye Tracking, Time-of-Flight based gestures, voice or some other technology fills that space is still up for grabs.

2012 may not yet be the year of the paradigm-changing, glitch-free new interface for the masses - but the future feels tantalisingly close.

- Published 8 March 2012