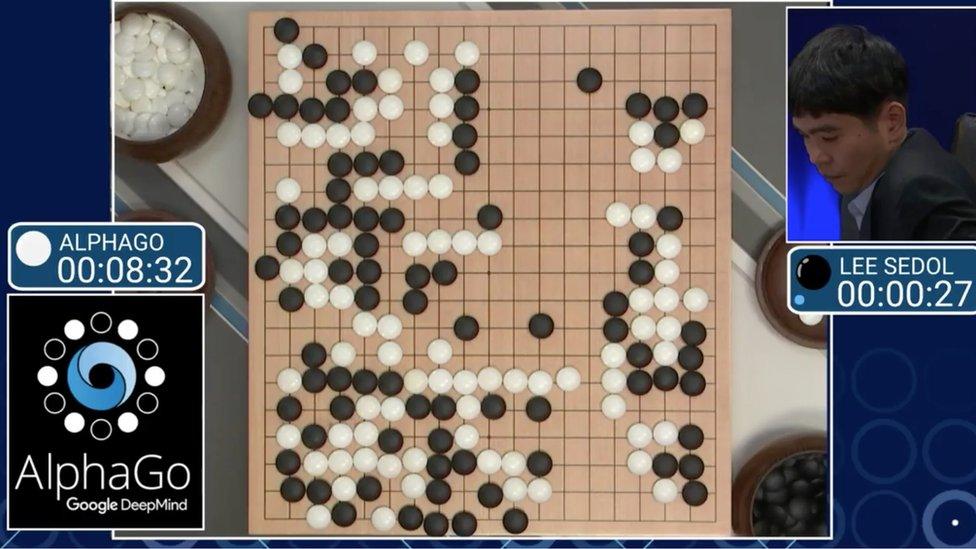

Artificial intelligence: Google's AlphaGo beats Go master Lee Se-dol


A computer program has beaten a master Go player 3-0 in a best-of-five competition, in what is seen as a landmark moment for artificial intelligence.

Google's AlphaGo program was playing against Lee Se-dol in Seoul, in South Korea.

Mr Lee had been confident he would win before the competition started.

The Chinese board game is considered to be a much more complex challenge for a computer than chess.

"AlphaGo played consistently from beginning to the end while Lee, as he is only human, showed some mental vulnerability," one of Lee's former coaches, Kwon Kap-Yong, told the AFP news agency.

Mr Lee is considered a champion Go player, having won numerous professional tournaments in a long, successful career.

Go is a game of two players who take turns putting black or white stones on a 19-by-19 grid. Players win by taking control of the most territory on the board, which they achieve by surrounding their opponent's pieces with their own.
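The surrounding rule can be sketched in a few lines of Python. This is a minimal illustration and not anything taken from AlphaGo: it assumes the standard capture rule that a connected group of stones is removed once it has no empty points next to it, and the board layout, the function names and the 5-by-5 size are made up for the example. Scoring and finer rules such as ko are left out.

```python
from typing import List, Set, Tuple

Point = Tuple[int, int]
Board = List[str]  # each row is a string of 'B', 'W' or '.' (empty point)


def group_and_liberties(board: Board, row: int, col: int) -> Tuple[Set[Point], Set[Point]]:
    """Flood-fill the group at (row, col); return its stones and adjacent empty points."""
    colour = board[row][col]
    if colour == ".":
        raise ValueError("no stone at that point")
    stones: Set[Point] = {(row, col)}
    liberties: Set[Point] = set()
    stack = [(row, col)]
    while stack:
        r, c = stack.pop()
        # Only the four orthogonal neighbours count as adjacent in Go.
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if not (0 <= nr < len(board) and 0 <= nc < len(board[0])):
                continue  # off the edge of the board
            if board[nr][nc] == ".":
                liberties.add((nr, nc))
            elif board[nr][nc] == colour and (nr, nc) not in stones:
                stones.add((nr, nc))
                stack.append((nr, nc))
    return stones, liberties


def is_captured(board: Board, row: int, col: int) -> bool:
    """A group is captured when it has no adjacent empty points left."""
    _, liberties = group_and_liberties(board, row, col)
    return not liberties


# Hypothetical 5-by-5 position: one white stone hemmed in by four black stones.
example = [
    ".B...",
    "BWB..",
    ".B...",
    ".....",
    ".....",
]

print(is_captured(example, 1, 1))  # the surrounded white stone
print(is_captured(example, 0, 1))  # a black stone that still has empty neighbours
```

A real game is played on the full 19-by-19 grid, but the check is the same at any size.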

In the first game of the series, AlphaGo triumphed by a very narrow margin - Mr Lee had led for most of the match, but AlphaGo managed to build up a strong lead in its closing stages.

Lee Se-dol is one of the game's greatest modern players

After losing the second match to DeepMind's program, Lee Se-dol said he was "speechless", adding that the AlphaGo machine had played a "nearly perfect game".

The two experts who provided commentary for the YouTube stream of the third game said that it had been a complicated match to follow.

They said that Lee Se-dol had brought his "top game" but that AlphaGo had won "in great style".

The AlphaGo system was developed by British computer company DeepMind which was bought by Google in 2014.

It has built up its expertise by studying older games and teasing out patterns of play. And, according to DeepMind chief executive Demis Hassabis, it has also spent a lot of time just playing the game.

"It played itself, different versions of itself, millions and millions of times and each time got incrementally slightly better - it learns from its mistakes," he told the BBC before the matches started.

This virtuous circle of constant improvement meant the supercomputer went into the five-match series stronger than when it beat the European champion late last year.

What does this mean for artificial intelligence? Dr Noel Sharkey, AI expert

Artificial Intelligence (AI) has flirted with games since its beginnings, because only smart humans excel at them. Unlike the real world, a closed system of fixed rules suits computing.

Despite critical voices, Arthur Samuel's draughts-playing program was an incredible achievement in 1959. Like AlphaGo, it learned by playing itself repeatedly, only many orders of magnitude slower.

Then the goal posts moved. The critics said chess was beyond computing's capability because it needed human intuition and creativity. But then when good amateur challengers had to eat their words in the 1970s, the goal post shifted again.

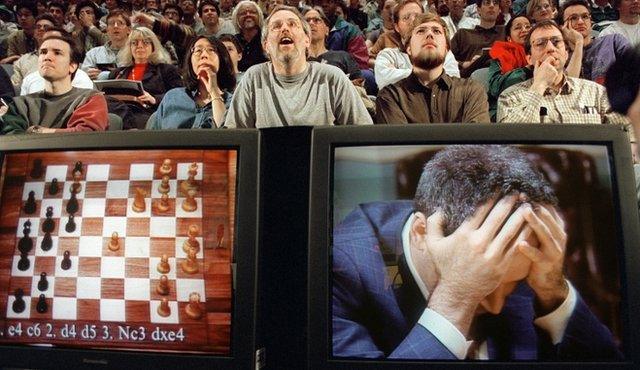

Critics claimed a horizon where computers might beat some professionals but certainly not grand masters. So when IBM's Deep Blue supercomputer beat world champion Garry Kasparov in 1997, the world was astonished. But Deep Blue was not the human-like intelligence that the founding fathers of AI had hoped for. It won by brute force by searching through millions of moves in seconds. Humans have limited memory and need brilliant pattern perception and creative strategies to win.

In 1997 IBM's Deep Blue supercomputer narrowly beat Garry Kasparov at chess

So the critics turned to Go as the impossible. Even with today's vast computer memories and incredibly fast processors (which have doubled more than eight times since Deep Blue), the ancient game will not yield to brute force. The size of the search required for Go is larger than chess by more than the number of atoms in the universe. It is the holy grail of AI gaming.

When Facebook announced earlier this year that their program had beaten a strong Go amateur, jaws dropped in the AI community - and fell to the floor that same day when Google's DeepMind genius team announced that their AlphaGo had beaten the European champion 5-0.

To beat one of the world's top players, DeepMind used a mixture of clever strategies to make the search much smaller. They trained their machine on 30 million expert moves to start with, and then the learning machine played against itself millions of times. It worked - the holy grail is in the bag and the goal posts can shift no further.

Does this mean AI is now smarter than us and will kill us mere humans? Certainly not. AlphaGo doesn't care if it wins or loses. It doesn't even care if it plays and it certainly couldn't make you a cup of tea after the game. Does it mean that AI will soon take your job? Possibly you should be more worried about that.

What is Go?

A brief guide to Go

Go is thought to have originated in China several thousand years ago.

Using black-and-white stones on a grid, players gain the upper hand by surrounding their opponent's pieces with their own.

The rules are simpler than those of chess, but a player typically has a choice of 200 moves, compared with about 20 in chess - there are more possible positions in Go than atoms in the universe, according to DeepMind's team.

It can be very difficult to determine who is winning, and many of the top human players rely on instinct.
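The branching figures in this guide give a rough feel for why brute-force search struggles with Go. The sketch below is a back-of-the-envelope estimate only: it counts move sequences rather than distinct board positions, the game lengths (150 moves for Go, 80 for chess) are illustrative assumptions that do not come from the article, and the figure of roughly 10^80 atoms in the observable universe is a commonly quoted order of magnitude, also assumed here.

```python
import math


def log10_tree_size(branching: int, depth: int) -> float:
    """Return log10 of branching**depth, a crude count of possible move sequences."""
    return depth * math.log10(branching)


# Choices per move, as quoted in the guide.
GO_BRANCHING = 200
CHESS_BRANCHING = 20

# Assumed game lengths in single moves (illustrative, not from the article).
GO_DEPTH = 150
CHESS_DEPTH = 80

# Assumed order of magnitude for the number of atoms in the observable universe.
LOG10_ATOMS = 80

go = log10_tree_size(GO_BRANCHING, GO_DEPTH)
chess = log10_tree_size(CHESS_BRANCHING, CHESS_DEPTH)

print(f"Chess: about 10^{chess:.0f} move sequences")
print(f"Go:    about 10^{go:.0f} move sequences")
print(f"Go exceeds chess by a factor of about 10^{go - chess:.0f}")
print(f"Go exceeds the atom estimate by a factor of about 10^{go - LOG10_ATOMS:.0f}")
```

Working in powers of ten keeps the numbers readable. Under these assumptions the gap between the two games is itself far larger than the atom estimate, so faster hardware alone does not close it.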
