Microsoft seeks to clean up Xbox game chat with AI

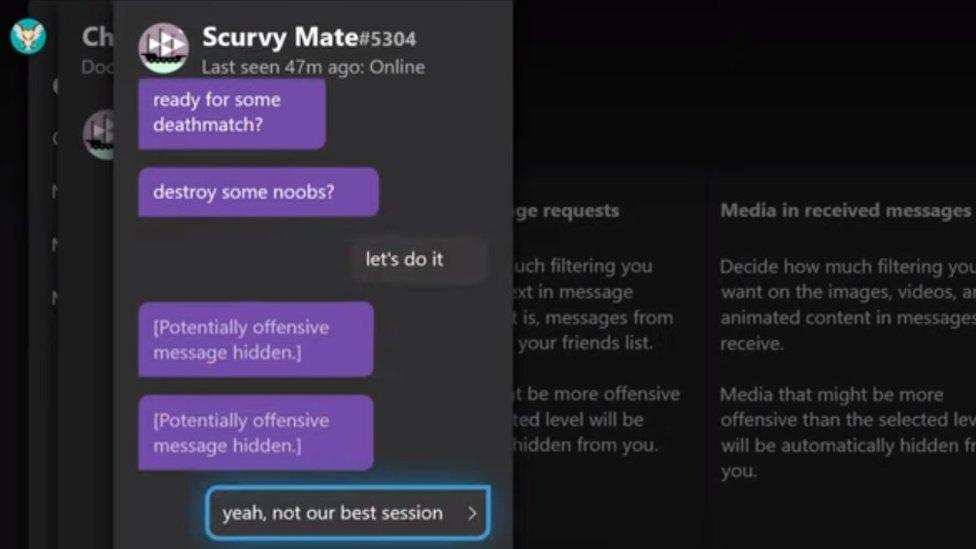

The filtering system hides messages when it judges them to be inappropriate

Microsoft is turning to AI and machine learning to let Xbox gamers filter the language they see in text messages.

It is introducing customisable filters that let gamers choose the vocabulary they see in messages from friends and rival players.

The four levels of filtering range from child-friendly through to entirely unfiltered.

Initially, the filters will cover only text, but they will eventually apply to voice chat as well.

Trash talk

In a blog post, Microsoft said the filters would be trialled with Xbox testers in October and then rolled out to all users of its console later in the year.

Players will be able to decide what level of filtering they want to apply to their interactions with friends or people they take on in games, it said.

Once set, the filters will also cover the Xbox apps on Windows 10 and smartphones, as well as the Xbox Game Bar.

Microsoft has introduced four filter levels: friendly, medium, mature and unfiltered. Filtered messages will be replaced by a warning saying "potentially offensive hidden message".

The "friendly" setting will be applied by default to all child accounts on Xbox. It screens out all vocabulary that could be offensive.

The "medium" setting will let some robust language through, explained Larry Hryb, director of programming for Xbox Live, in a video.

"Once you have applied the medium filter you'll notice that you'll still be able to see things like friendly trash talk between friends but vulgar words that we know are intended to bully or discriminate will be filtered out of your gaming experience," he said.

Blizzard has taken steps to improve player behaviour in Overwatch

Microsoft's "mature" setting filters out only text and messages that are almost always harmful to those who receive them, he added. Gamers can set different levels of filtering for friends and non-friends.

The filtering system should be seen as an adjunct to Microsoft's existing community standards, which encourage good behaviour while gaming, it said, adding that players should still report people who behave badly.

Speaking to tech news site The Verge, Rob Smith, a program manager on Xbox Live's engineering team, said the team was looking at developing systems that measure "toxicity" in online chat and could automatically mute people who abuse friends and foes.

This system could feed into the Xbox player reputation system, in which a bad rating can get players banned.

Microsoft's work comes against a background of efforts by many other games firms to curb toxic behaviour. Many of these systems have struggled to understand the context in which remarks are made, and others have struggled with the slang used by certain groups.

Studies have shown that abuse is widespread among gamers. An Anti-Defamation League study released in late July found that 74% of online players said they had experienced some form of harassment.

More than half of the respondents to the ADL study said the abuse was based on "race, religion, ability, gender, gender identity, sexual orientation, or ethnicity".