Social media: How can we protect its youngest users?

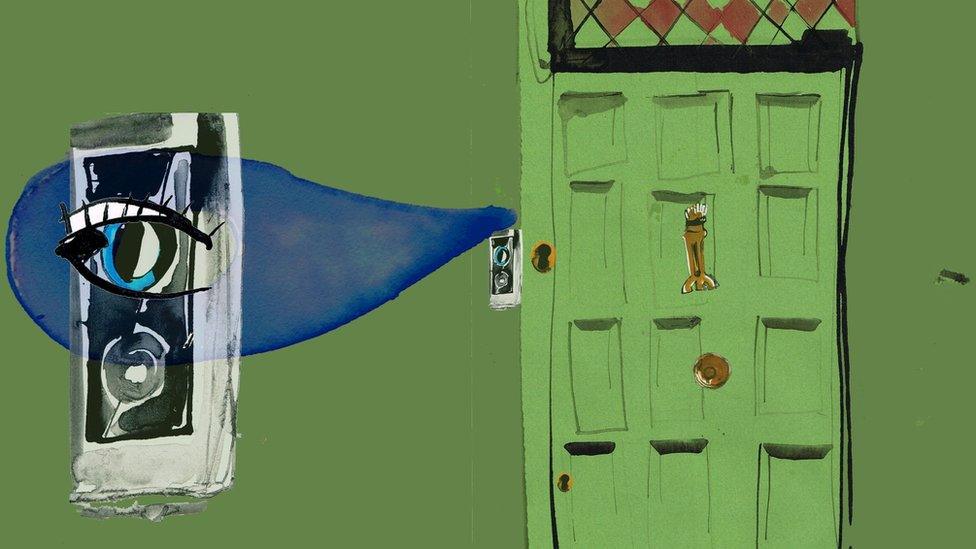

Children searching for content relating to depression and self-harm can be exposed to more of it by the recommendation engines built into social networks.

Sophie Parkinson was just 13 when she took her own life. She had depression and suicidal thoughts.

Her mother, Ruth Moss, believes Sophie eventually took her own life because of the videos she had watched online.

Like many youngsters, Sophie was given a phone when she was 12.

Ruth recalls discovering soon after that Sophie had been using it to view inappropriate material online.

Sophie Parkinson, pictured here with her mum, killed herself six years ago aged 13

"The really hard bit for the family after Sophie's death was finding some really difficult imagery and guides to how she could take her own life," she says.

Almost 90% of 12 to 15-year-olds have a mobile phone, according to the communications watchdog Ofcom. And it estimates that three-quarters of those have a social media account.

The most popular apps bar under-13s, but many younger children sign up anyway and the platforms do little to stop them.

The National Society for the Prevention of Cruelty to Children (NSPCC) thinks the tech firms should be forced by law to think about the risks children face on their products.

"For over a decade, the children's safety has not been considered as part of the core business models by the big tech firms," says Andy Burrows, head of child safety online policy at the charity.

"The designs of the sites can push vulnerable young teenagers, who are looking at suicide or self-harm, to watch more of that type of content."

Recognise and remove

Recently, a video of a young man taking his own life was posted on Facebook.

The footage subsequently spread to other platforms, including TikTok, where it stayed online for days.

TikTok has acknowledged users would be better protected if social media providers worked more closely together.

But Ruth echoes the NSPCC's view and thinks social networks should not be allowed to police themselves.

She says some of the material her daughter accessed six years ago is still online, and typing certain words into Facebook or Instagram brings up the same imagery.

Earlier this week, Facebook announced the expansion of an automated tool to recognise and remove self-harm and suicide content from Instagram, but it has said data privacy laws in Europe limit what it can do.

Smaller start-ups are also trying to use technology to address the issue.

SafeToWatch is developing software, trained using machine-learning techniques, to block inappropriate scenes including violence and nudity in real time.

SafeToWatch is designed to detect explicit photos

It analyses the context of any visual material and monitors the audio.

The company suggests this provides a balanced way for parents to protect their children without intruding too deeply into their privacy.

"We never let parents see what the kid is doing, as we need to earn the trust of the child which is crucial to the cyber-safety process," explains founder Richard Pursey.

'Frank conversations'

Ruth suggests it's often easy to blame parents, adding that safety tech only helps in limited circumstances as children become more independent.

"Most parents can't know what exactly goes on their teenager's mobile phone and monitor what they have seen," she says.

And many experts agree that it is inevitable most children will encounter inappropriate content at some point, so they need to gain "digital resilience".

"Safety online should be taught in the same way as other skills that keep us safe in the physical world," explains Dr Linda Papadopoulos, a psychologist working with the Internet Matters safety non-profit.

"Parents should have frank conversations about the types of content kids might encounter online and teach them ways to protect themselves."

She says the average age at which children are exposed to pornography is 11. When this happens, she advises, parents should try to discuss the issues involved rather than confiscating the device used to view it.

"Take a pause before you react," she suggests.
