AI could worsen cyber-threats, report warns

Artificial intelligence could increase the risk of cyber-attacks and erode trust in online content by 2025, a UK government report warns.

The tech could even help plan biological or chemical attacks by terrorists, it says.

But some experts have questioned whether the tech will evolve as predicted.

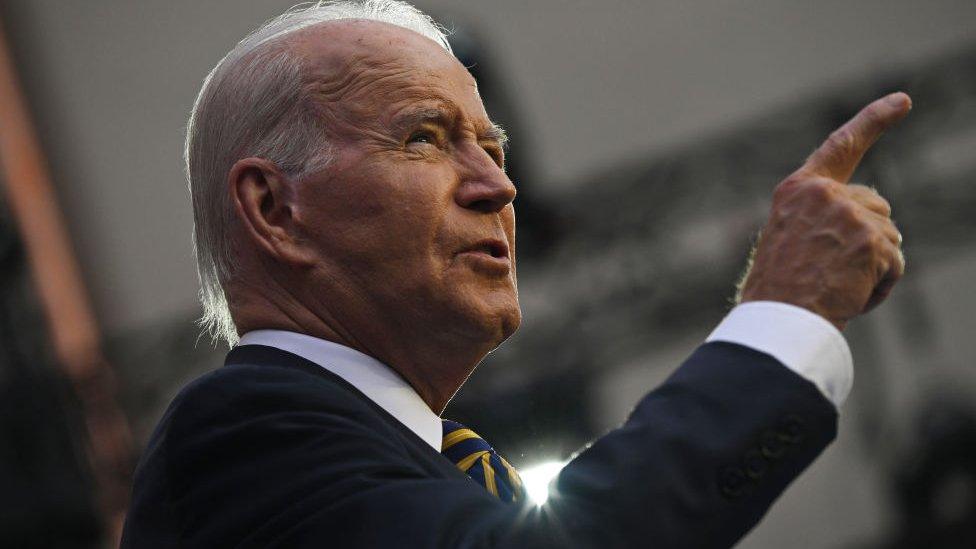

Prime Minister Rishi Sunak is expected to highlight opportunities and threats posed by the technology on Thursday.

The government report looks at generative AI - the type of system that currently powers popular chatbots and image generation software.

It is based in part on declassified information from intelligence agencies.

The report warns that by 2025 generative AI could be "used to assemble knowledge on physical attacks by non-state violent actors, including for chemical, biological and radiological weapons".

It says while firms are working to block this, "the effectiveness of these safeguards vary".

There are obstacles to getting hold of the knowledge, raw materials, and equipment for attacks, but those barriers are falling - potentially accelerated by AI, according to the report.

By 2025, it's likely AI will also help create "faster-paced, more effective and larger scale" cyber-attacks, it warns.

Joseph Jarnecki, who researches cyber threats at the Royal United Services Institute, said that AI could help hackers, especially in overcoming their difficulties in mimicking official language.

"There's a tone that is adopted in bureaucratic language and cybercriminals have found that quite difficult to harness," he told the BBC.

The report comes ahead of a speech by Mr Sunak on Thursday in which he is expected to set out how the UK government aims to make AI safe, and establish the UK as a global leader in AI safety.

"AI will bring new knowledge, new opportunities for economic growth, new advances in human capability, and the chance to solve problems we once thought beyond us. But it also brings new dangers and new fears," Mr Sunak is expected to say.

He will commit to addressing those fears head on, "making sure you and your children have all the opportunities for a better future that AI can bring".

AI meeting

The speech sets the scene for a government summit next week to discuss the threat posed by highly advanced AIs.

It will focus on the regulation of so-called "Frontier AI": powerful future AI systems that ministers say "can perform a wide variety of tasks" and "exceed the capabilities of today's most advanced models".

Whether or not such systems could pose a threat to humanity is hotly debated.

Another newly published report by the Government Office for Science, which advises the prime minister and cabinet, says "many experts consider this a risk with very low likelihood and few plausible routes to being realised."

It says to pose a risk to human existence, an AI would need some control over vital systems, such as weapons or financial systems.

It would also need new skills such as the capacity to improve its own programming, the ability to evade human oversight and a sense of autonomy.

But it notes "there is no consensus on the timelines and plausibility of when specific future capabilities could emerge".

Rules of the road

The big AI firms have mostly agreed that regulation is necessary, and their representatives are likely to attend the summit.

But Rachel Coldicutt, an expert on the social impact of technology, questioned the focus of the summit.

She said it placed too much weight on future risk: "It makes loads of sense that technology companies, who stand to lose more by being regulated about the things they're making in the here-and-now, will focus on long-term risk."

"And it has felt over the summer, as if the government position has been very strongly aligned, supporting those views," she told the BBC.

But she said the government reports were "moderating some of the fervour" about these futuristic threats and made it clear that there was a gap between "the political position and the actual technical one".
