'Let police fight crime with facial recognition' plea


South Wales Police piloted the use of facial recognition in Cardiff - it was later ruled unlawful

Police should be allowed more access to facial recognition technology, a firm developing it for use in the private sector has said.

Last year, appeal court judges ruled a trial project to scan thousands of faces by South Wales Police was unlawful. The force did not appeal.

Welsh company Credas said laws were not keeping up with the latest technology.

The Home Office said it wants police to use new crime-reducing technology while "maintaining public trust".

Credas believes such facial recognition technology could be a vital tool in fighting crime.

"Ten years ago it would have felt space age, but now it's everywhere - just logging into my phone or laptop, we're all used to it now," said chief executive Rhys David.

"But the legislation will never keep up with the technological advancements."

The company, based in Penarth in the Vale of Glamorgan, works with businesses to prevent crime in commercial settings by helping them confirm a client's identity.

These businesses can include estate agents, law firms, accountants or gambling operators - any business regulated to reduce fraud and money laundering.

"There's common stories of people buying houses with someone else's identity and manipulating the paperwork so that the funds get transferred into the wrong account and it's too late then - we can't recover that," said Mr David.

"It's a very difficult position to be in, but technologies like ours are closing the gap."

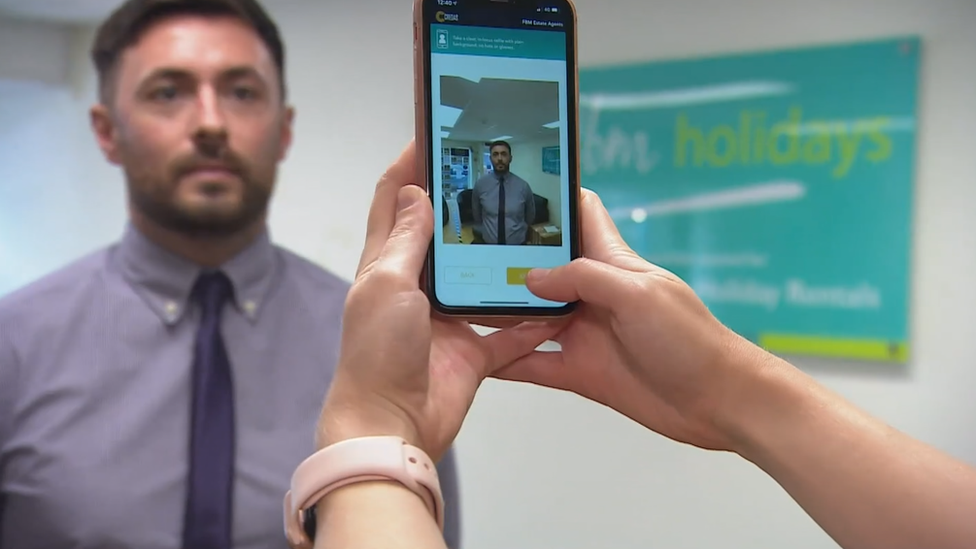

Apps can compare people's picture to that on their passport

Credas's app uses facial recognition - people take a selfie and the app compares it to a photograph of their passport to verify they are who they claim to be.

Claire Williams works for FBM estate agent in Milford Haven, Pembrokeshire, which has been using the software for the past two years.

"Before we would take people's passports or driver's licence, they would either come into the office and we would photocopy it, or we would even accept a scanned, emailed copy.

"There would be no way of knowing whether these were legitimate passports and driver's licences.

"They might have been using fake IDs, trying to launder money through the property industry - putting money into the properties, then reselling them to launder the money."

But scanning faces to confirm details for a mortgage is a very different beast to automated facial recognition, which is what was being trialled by South Wales Police - scanning faces in a crowd, often without people's knowledge.

That was ruled unlawful after a challenge by civil rights group Liberty and Ed Bridges from Cardiff.

"Real-time surveillance is considerably more complex than in the commercial space where it's a fairly static, controlled environment. But we should be adopting it and encouraging it to reduce a criminal footprint," added Mr David.

"I find it really sad that the police aren't encouraged to use technology like this to keep our country safe.

"Let's be honest, the police don't want to sell us trainers. They're not looking to capture our images or biometric footprints to sell us goods. It's to keep us safe, so the police can run very sophisticated facial matching programmes in real time to identify criminals."

The frustration was echoed by the surveillance camera commissioner, Tony Porter, who is the independent regulator appointed to oversee the use of camera systems in England and Wales.

Following the appeal court ruling on South Wales Police in August, he said he had been "fruitlessly and repeatedly" calling for an updated code the police could follow.

While campaigners Liberty felt the court's ruling left little room for the technology to be safely used, Mr Porter disagreed, adding: "I believe adoption of new and advancing technologies is an important element of keeping citizens safe."

He has issued new guidance on the use of facial recognition in light of the case, but it remains just that - guidance, not law.

It has left police forces still trying to iron out the problems raised by the Court of Appeal - the potential for gender and ethnic bias, and the lack of a robust code covering when, how and where the technology can be used, and in search of whom.

Prof Martin Innes, from the Universities' Police Sciences Institute, evaluated the rollout of automatic facial recognition for South Wales Police in 2018, flagging ethical and regulatory challenges facing forces.

"If you look back at the history of new and innovative technologies in policing this is what always happens. You have to let the law catch up a little bit and find out what matters and where the key points of regulation are," he said.

At present, different standards between the private and public sectors "could be very, very confusing," he added.

"There is a risk that these technologies get introduced almost by stealth and they start popping up everywhere."

Pembrokeshire estate agent Claire Williams now uses a facial recognition app to match faces to identity

In a way, some of that has already happened, from mobile phones that can detect your face to hi-tech doorbells

Stopping criminal harm "seems to be an equally justifiable reason" to use the technology, argued Prof Innes.

"But we need to think quite carefully about how far do we want this to go, and where is it appropriate for us to introduce these technologies in our lives.

"There are issues - but there are potentially opportunities and benefits to be gained if it can be done in the right way, as well."

The Home Office and the police say they will consider any ideas that could improve the way live facial recognition technology is used.

"We want police to use new technologies, like live facial recognition, in a way that reduces crime while maintaining public trust," said a Home Office spokesperson.

"We are working closely with the police to ensure national College of Policing guidance complies with the Court of Appeal's request to clarify how live facial recognition will be used.

"The government committed in the Home Office Biometrics Strategy to review the Surveillance Camera Code of Practice and it will be updated in due course."
