Instagram criticised by judge over schoolgirl grooming chat logs

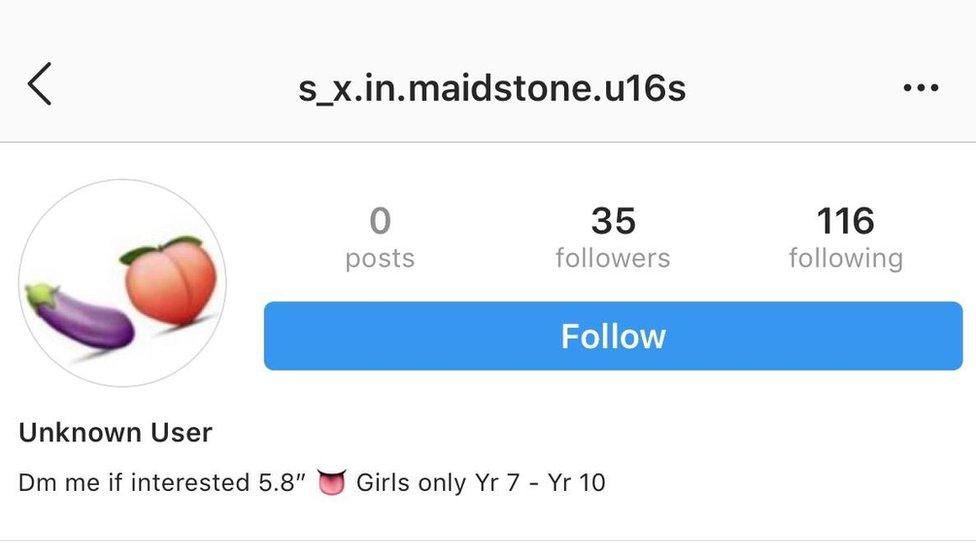

Dominic Nielen-Groen called himself Papa Bear on an Instagram hashtag used by paedophiles

A judge has criticised Instagram for "failing" to hand over chat logs between a man and a vulnerable 15-year-old girl he was grooming.

Dominic Nielen-Groen, from Wolverhampton, sent numerous messages to the girl from Seaton, Devon.

The 39-year-old was found guilty of grooming a child with the intention of committing a sexual offence and jailed at Exeter Crown Court for 18 months.

Instagram said it had blocked all associated hashtags he had used.

Nielen-Groen, a computer engineer, used the name Papa Bear in an Instagram community that was "widely used by paedophiles", Recorder Jonathan Barnes said.

The girl, who cannot be named for legal reasons, had more than 3,000 followers and had sent followers pictures of her body in exchange for money.

But the court also heard that her online activities had a traumatic effect on her, changing her from a model student predicted to get top GCSE grades into a persistent truant who missed more than half her final term at school.

Nielen-Groen had travelled more than 160 miles (258km) to Seaton to meet the girl in August 2018.

Police arrested him after the girl had contacted them earlier in the year.

The judge criticised Instagram's failure to hand over chat logs of the conversations between Nielen-Groen and the girl.

He told the court: "Sometimes these social media companies put misplaced loyalty to their customers before the administration of justice."

He put Nielen-Groen on the sex offenders register and made him the subject of a Sexual Harm Prevention Order, which restricts his future contact with children and enables the police to monitor his use of the internet.

Instagram's owner, Facebook, said the associated hashtags had been blocked so they would "no longer be searchable, viewable or recommendable".

It said in the past year it had "tripled the size of our safety team to 30,000 people" and worked closely with police and child protection organisations.

The firm also used technology "to proactively detect grooming and prevent child sexual exploitation on our platforms" and was "continuing to build new features and technology to find harmful content".

"Keeping young people safe on our platforms is a top priority," a statement said.
